Yesterday, I had the privilege to attend a meetup on Istio, presented by Dan Ciruli, the PM for Istio at Google. It was a great presentation on an interesting topic, and I wanted to share my takeaways from the meetup via this blog post. What follows is (after my own introduction to the topic) mainly a report of the talk itself, and credit for all ideas goes to Dan Ciruli.

Kubernetes is rapidly becoming the deployment platform of choice for new applications. All major public cloud providers offer a managed Kubernetes service, while various other companies offer either their own distribution of Kubernetes or a level of service on top of the open source project. I personally started delving into the topic in the middle of last year, and have quickly learned that the technology is rapidly evolving.

One of those evolutions – although arguably not Kubernetes-specific – is the introduction of service meshes. A service mesh is an infrastructure component that helps manage inter-service communication. Istio is an open source implementation of such a service mesh.

Before delving into the topic itself, I wanted to make 2 book recommendations that help position the technology better:

- Site Reliability Engineering, edited by Betsy Beyer, Chris Jones, Jennifer Petoff and Niall Richard Murphy. This book was mentioned by Dan Ciruli during his presentation. I’ve enjoyed reading this book myself, as it helps you understand the complexities of, and potential strategies for, running planet-scale distributed applications. You can read it online for free here

- Designing Distributed Systems, by Brendan Burns. This quite short ebook introduces multiple microservices design patterns for distributed systems, with a focus on deploying these patterns on Kubernetes. In it, Brendan explains for instance what a sidecar is. Free download available here

The reason I attended this meetup is that I want to learn more about container networking. More on my discovery plans in the conclusion of this post – but if you want to learn more about this as well, let me know and I’ll let you follow along in my discovery.

But for now, let’s jump into Istio:

A service mesh looks like a service bus, but is a different beast

If you look at how I described a service mesh in my intro (‘an infrastructure component that helps manage inter-service communication’), you might think it is actually the same as a service bus. Although both offer similar functionality, the way they offer it is different. The main difference between a bus and a mesh is that the former requires you to put a message on the bus, while the latter allows you to transparently call the service you want to call.

A service bus traditionally requires you to use client libraries in your application to put a message on the bus. Looking at the Azure Service Bus, there are specific SDKs for .NET, Java, Node.js that allow you to push/pull messages to/from our service bus.

A service mesh, on the other hand, is transparent for your application. Just like HTTP made it transparent to make API calls, a service mesh makes it transparent to handle inter-service communication. For the developer, it feels like the mesh is integrated into the networking stack.

So, why use a service mesh?

During his talk, Dan mentioned a couple of reasons to use a service mesh. The common denominator of these reasons is the complexity that comes with using microservices: troubleshooting / deployment / separating apps from infra / decoupling ops from development.

Microservices offer a number of benefits for application development and operations. You can now have multiple “two-pizza teams” working independently on their part of the application (their microservice) and deploying their service independently. Each service can scale independently, based only on its own load, and becomes an isolated fault domain.

Having your application logic spread across multiple microservices, with each microservice running multiple instances, makes troubleshooting a lot harder. With the monolith you could log in to the machine, browse the log files and look at CPU/MEM counters. What do you do when your microservices application has become slow to respond? How do you pinpoint errors? How do you identify the main sources of traffic?

Deploying your monolith used to be a complex task, but it remained a single, manageable and plannable task. Deploying a microservice is less complex than deploying a full monolith, but introduces other challenges. How do you perform A/B testing? How do you perform canary releases? How do you monitor impact on other services?

Logic such as retries and circuit breaking should not be part of an application. Imagine 4 microservices each implementing their own (potentially language-specific) logic for retries/circuit breaking. Why not extract those functionalities and have the ops team offer them uniformly?
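
To make concrete what every team would otherwise hand-roll, here is a minimal Python sketch of retries with exponential backoff wrapped in a simple circuit breaker. The names (`CircuitBreaker`, `call_with_retries`) and the thresholds are hypothetical and purely illustrative; this is not how Istio or Envoy implement these features.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected immediately."""


class CircuitBreaker:
    """Hypothetical minimal breaker: opens after N consecutive failures."""

    def __init__(self, failure_threshold=3, reset_after_seconds=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_seconds = reset_after_seconds
        self.clock = clock
        self.consecutive_failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_seconds:
                raise CircuitOpenError("circuit open, call rejected")
            # Cool-down elapsed: allow a trial call; one failure reopens.
            self.opened_at = None
            self.consecutive_failures = self.failure_threshold - 1
        try:
            result = func()
        except Exception:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.consecutive_failures = 0
        return result


def call_with_retries(func, breaker, attempts=3, base_delay=0.1,
                      sleep=time.sleep):
    """Retry func through the breaker, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise  # never retry while the circuit is open
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
```

Even this small version has several tunable knobs (threshold, cool-down, backoff), which illustrates why keeping four slightly different copies of it in four codebases is unattractive.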

In comes Istio, a service mesh. Or rather an open services platform

Istio is more than just a service mesh. It is a full open services platform. Its design principle was to build a highly modular and pluggable platform. More about where you can plug in when we discuss Istio’s architecture.

Istio has 3 value propositions: Observability – Control – Security.

Observability means Istio gives you clear visibility into interservice communication. The main goal for observability is to give you golden signals about your service, namely traffic, error rates and latency. Once you have these uniform metrics – also called service level indicators – you can start to set service level objectives (check out the SRE book mentioned in the intro for more info on how Google deals with this). Istio logs every call and traces a representative sample of calls. With this data, you can get a clear view into service interdependencies and create a (sometimes shocking) service graph.
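
As a rough illustration of how the three signals named above can be derived from per-call logs, here is a small Python sketch. The `CallRecord` shape, the choice to count only 5xx responses as errors, and the nearest-rank percentile are all assumptions made for this example; they do not describe Istio’s actual telemetry format.

```python
import math
from dataclasses import dataclass


@dataclass
class CallRecord:
    """One logged call; a hypothetical record shape for illustration."""
    status_code: int
    latency_ms: float


def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def golden_signals(records, window_seconds):
    """Summarise one time window of calls into traffic, errors and latency."""
    if not records:
        return {"requests_per_second": 0.0, "error_rate": 0.0,
                "p50_latency_ms": None, "p99_latency_ms": None}
    latencies = sorted(r.latency_ms for r in records)
    # Treat 5xx responses as errors; which codes count is a per-service choice.
    errors = sum(1 for r in records if r.status_code >= 500)
    return {
        "requests_per_second": len(records) / window_seconds,
        "error_rate": errors / len(records),
        "p50_latency_ms": percentile(latencies, 50),
        "p99_latency_ms": percentile(latencies, 99),
    }
```

Because every service is measured the same way, numbers like these can be compared across services and turned into service level objectives.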

Istio also wants to give you greater control over interservice traffic. It lets you configure retry policies, circuit breaking, rate limiting(*)… Istio allows you to roll out a new version of your application while closely monitoring and controlling traffic rates. You could create a canary release by sending only 1% of traffic to the new service, or you could create an internal preview by sending only traffic from employees, and not external customers, to a new service. With the golden signals you get from the first point discussed, you can make sure your new service doesn’t break your application.
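
The routing decision behind a canary release or an internal preview can be sketched in a few lines of Python. This is only a conceptual model: in Istio the split is declared in configuration and applied by the proxies, and the header name `x-internal-user` and the version labels `v1`/`v2` below are hypothetical.

```python
import random


def pick_version(weights, rng=random):
    """Pick a version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def route(request_headers, weights, rng=random, canary="v2"):
    """Decide which version of a service should handle one request."""
    # Internal preview: requests flagged as coming from employees
    # always go to the canary version (hypothetical header name).
    if request_headers.get("x-internal-user") == "true":
        return canary
    # Everyone else is split by weight, e.g. 99% to v1 and 1% to v2.
    return pick_version(weights, rng)
```

Calling `route({}, {"v1": 99, "v2": 1})` sends roughly one request in a hundred to `v2`; shifting more traffic to the new version is then a matter of changing the weights, not the application.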

(*) Maarten Balliauw has a great talk on rate limiting. You can find upcoming dates here and there is a recording available here.

Finally, Istio helps make your interservice communication more secure without impacting your application itself. Istio makes it straightforward to set up mTLS between your services, so every call is mutually authenticated (mTLS is optional, though). If you don’t know about mTLS (mutual TLS), read up on it here: Mutual authentication. Furthermore, you can define policies that govern which services can talk to which other services. All of this is transparent to the application, because a sidecar deployed with each pod handles the authentication/authorization/traffic control.

Istio architecture

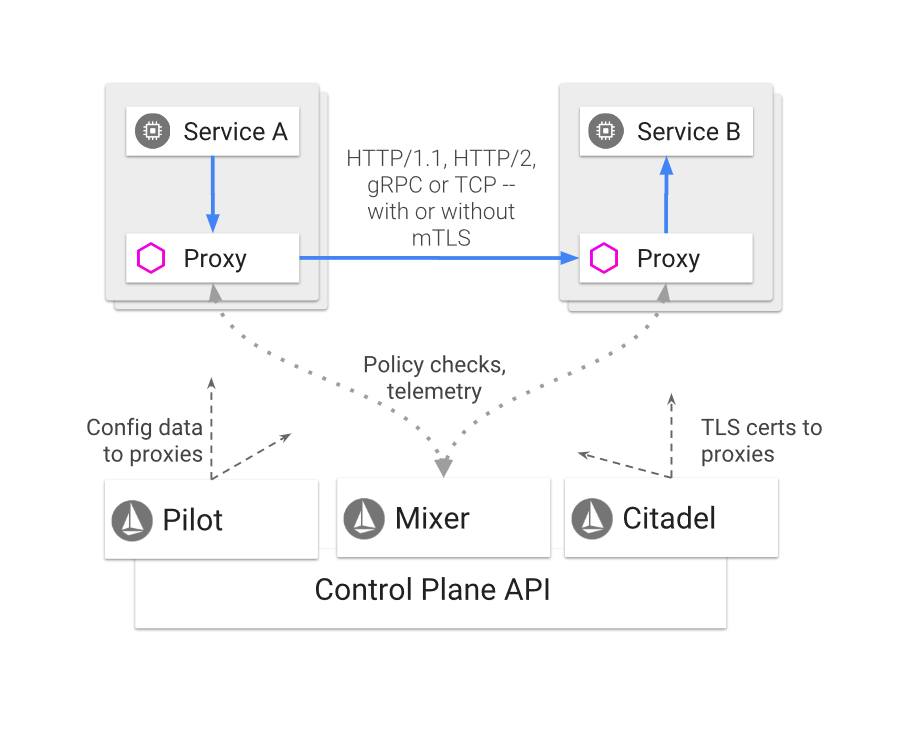

(pictures below are taken from: https://istio.io/docs/concepts/what-is-istio/ )

Istio’s architecture is made up of 4 components: Citadel, which acts as a certificate authority – (Envoy?(*)) proxies, which handle all traffic – Pilot, which pushes configuration data to the proxies – and Mixer, which is the pluggable policy enforcer and telemetry gateway.

(*) This was not discussed during the talk itself, but I add a short discussion on it at the end of this section.

Citadel is the certificate authority (CA) that streamlines mTLS between services. mTLS is optional, but it wasn’t clear to me whether Citadel itself is a required component for Istio.

Pilot is the component that provides service discovery and pushes configuration data to the proxies. It contains the high level traffic routing rules. Pilot can integrate with Kubernetes, Consul, Eureka or Nomad for the actual service discovery.

One interesting nugget that was shared is that the Istio platform does client side load balancing. If a destination service has multiple endpoints, Pilot will provide these multiple endpoints to the proxy requesting the info and the proxy will do client side load balancing. The (Envoy) proxies will consequently do health checking from the client side, and take unhealthy destinations out of load balancing rotation. More on this interesting pattern here.
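
The pattern can be sketched as follows, assuming a plain round-robin policy. The `ClientSideBalancer` class is a hypothetical illustration rather than Envoy’s implementation, and real proxies support more sophisticated balancing and health-checking strategies.

```python
class ClientSideBalancer:
    """Round-robin over the endpoints currently considered healthy."""

    def __init__(self, endpoints):
        # In Istio the endpoint list would be supplied by Pilot; here it is
        # simply passed in.
        self.endpoints = list(endpoints)
        self.healthy = set(self.endpoints)
        self._next = 0

    def mark(self, endpoint, is_healthy):
        """Record the outcome of a client-side health check."""
        if is_healthy:
            self.healthy.add(endpoint)
        else:
            self.healthy.discard(endpoint)

    def pick(self):
        """Return the next healthy endpoint, skipping unhealthy ones."""
        # Walk the list at most once so we stop if nothing is healthy.
        for _ in range(len(self.endpoints)):
            candidate = self.endpoints[self._next % len(self.endpoints)]
            self._next += 1
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy endpoints available")
```

The key point is that the caller, not a central load balancer, holds the endpoint list and the health state, so an unhealthy destination drops out of rotation without an extra network hop.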

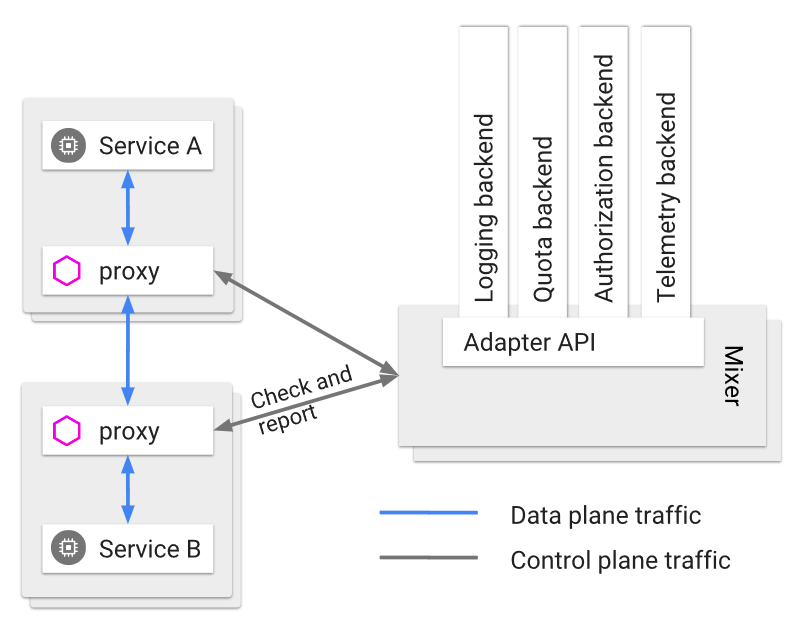

Mixer is the enforcer of access control and policies. It is a pluggable platform that has adapters for logging, quota, authorization and telemetry. Mixer provides a uniform abstraction over multiple backend systems. When performing a policy check, there are two levels of caching to avoid overloading the backend systems. First and foremost, the Envoy proxies heavily cache the authorization requests, and Mixer itself is also designed to cache most requests. You wouldn’t want every call to result in a backend authorization check.
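
The effect of such caching can be shown with a small time-to-live cache in front of a policy backend. This is a sketch under assumptions: `CachedPolicyChecker`, its TTL and the `(source, destination)` cache key are hypothetical and do not reflect how Envoy or Mixer actually key or expire their caches.

```python
import time


class CachedPolicyChecker:
    """Caches allow/deny decisions for a fixed time-to-live."""

    def __init__(self, backend_check, ttl_seconds=60.0, clock=time.monotonic):
        self.backend_check = backend_check  # the expensive call to the backend
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._cache = {}  # (source, destination) -> (decision, expires_at)

    def is_allowed(self, source, destination):
        key = (source, destination)
        now = self.clock()
        cached = self._cache.get(key)
        if cached is not None and now < cached[1]:
            return cached[0]  # served from cache, backend not contacted
        decision = self.backend_check(source, destination)
        self._cache[key] = (decision, now + self.ttl_seconds)
        return decision
```

Two such layers can be stacked by passing one checker’s `is_allowed` as the `backend_check` of another, which mirrors the proxy-level and Mixer-level caching described above. The trade-off is that a policy change only takes effect once cached decisions expire.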

I remember 2 interesting nuggets that were shared around Mixer: (1) Due to the heavy caching of policies, Mixer typically only introduces additional latency on the 99th percentile. (2) Active Directory is a popular policy engine. However, I couldn’t find any documentation on Istio integration with AD, so I don’t know if it is actually possible to integrate Istio with AD.

The proxy is the actual heart of the Istio service mesh. The proxies are deployed as sidecars to your pods, and are the only components actually in the data plane (Citadel/Mixer/Pilot are not in the actual traffic flow). The proxies are configured via Pilot, perform policy checks against Mixer and send telemetry and logging to Mixer.

The Istio documentation is pretty clear that these proxies should be Envoy, although I’ve read some blogs/articles by Nginx saying that Nginx could be used as an Istio proxy. I even read somewhere that we should expect proxy discussions to come up, much like we had the discussions on Kubernetes vs Swarm vs Mesos. I personally have no clue and no opinion on where this is going to go, but I found it interesting to read about the proxy discussion from Nginx and not hear a word about proxies other than Envoy at the meetup. Check out for instance this talk summary by Nginx.

Kubernetes native, not Kubernetes only

Istio is a Kubernetes native technology. It can integrate with bare-metal / virtual machines, as long as the apps deployed on those are integrated with a sidecar proxy. You do however still need a Kubernetes API server to handle the YAML configuration of Istio. Dan recommended actually running the Istio control plane on Kubernetes, but it is not required. Obviously, Istio can integrate with services outside of the mesh itself as well, but then you don’t have all the controls you have with services that are in the mesh.

Conclusion

I haven’t had the chance to play with Istio (yet), but it does sound interesting. The whole concept of a service mesh adds value to and takes complexity out of a microservices deployment. Being able to control retries, circuit breaking, A/B testing and canary releases in a consistent way outside of the actual application makes a lot of sense. You just do the service call (be it REST or gRPC), and the service mesh will take care of the rest.

Where I personally struggle in the container space is with the networking concepts. Questions like “how does Istio fit in with CNI / Calico / Flannel” or “how do you handle multi-region networking correctly with containers/Kubernetes” are things I want to delve into further. I am planning to research container networking and publish my findings in a couple of posts, and would certainly like to hear from you if you’d be interested in following along with my discovery. Let me know!