A while ago I wrote about a self-destructing VM. The process was completely self-contained: the VM deleted itself. This was a nice demo, but not too useful in my day-to-day work, where I create demos of all kinds of different resources.

In my case it’d be a lot better to have a daily automation script that would clean up after me. Kind of like a Roomba for Azure.

Let’s think this through, work out what is required, and then build it.

Architecture

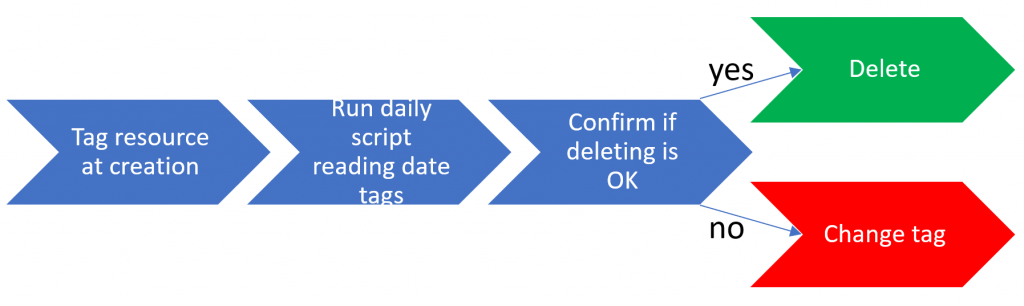

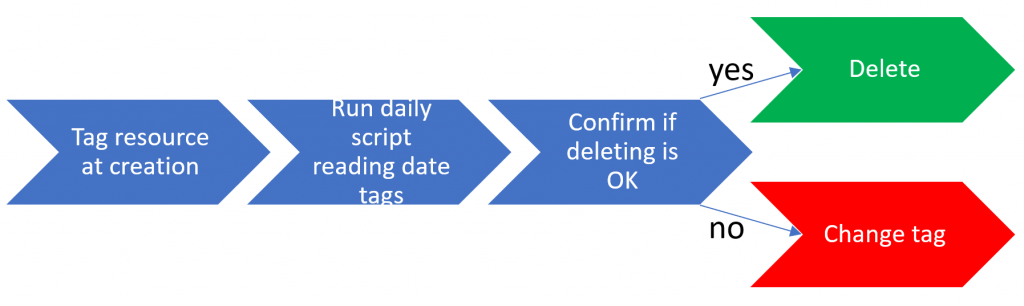

From a timeline perspective, this would have to look something like this:

Looking at this from right to left, this means we would need a system that asks for confirmation prior to deleting, that either deletes the resources or changes the tag value to a future date.

This confirmation is triggered from a daily script that reads the tags, and triggers the confirmation email if the “to delete by” date is in the past.

Finally, we need a (preferably) automated way to tag our resources with a date that we want them to be deleted by.

Reasonably speaking, I see two options of “things” we can delete, either a full resource group or actual individual resources. As I hate too many confirmations, I will create my workflow based on the resource groups rather than the resources themselves.

So, thinking about options for the implementation, these are the options I see:

- Tagging: Manual tagging – Azure Policy auto-tagging

- Daily script: Azure Automation – Logic Apps – Functions

- Confirmation: Logic Apps

My choice for the end-to-end falls on policy auto-tagging + Azure Automation + Logic Apps. Why? I picked auto-tagging because I’m lazy and I’ll probably forget to tag my new resource groups. I picked Azure Automation for checking the tags, as this is an easy environment to schedule recurring tasks, and it supports code-based development, which will give me easy ways to parse dates. I picked Logic Apps for the confirmation, because that mechanism is built in.

The only thing that worries me right now is auto-tagging resources with a “to delete by” date. I’m thinking of putting logic in place that tags resources to be deleted “next week”. The automation script and the Logic App have me less worried. So, why don’t we get started with the hardest task: tagging resources with a future date.

Auto-tagging resources

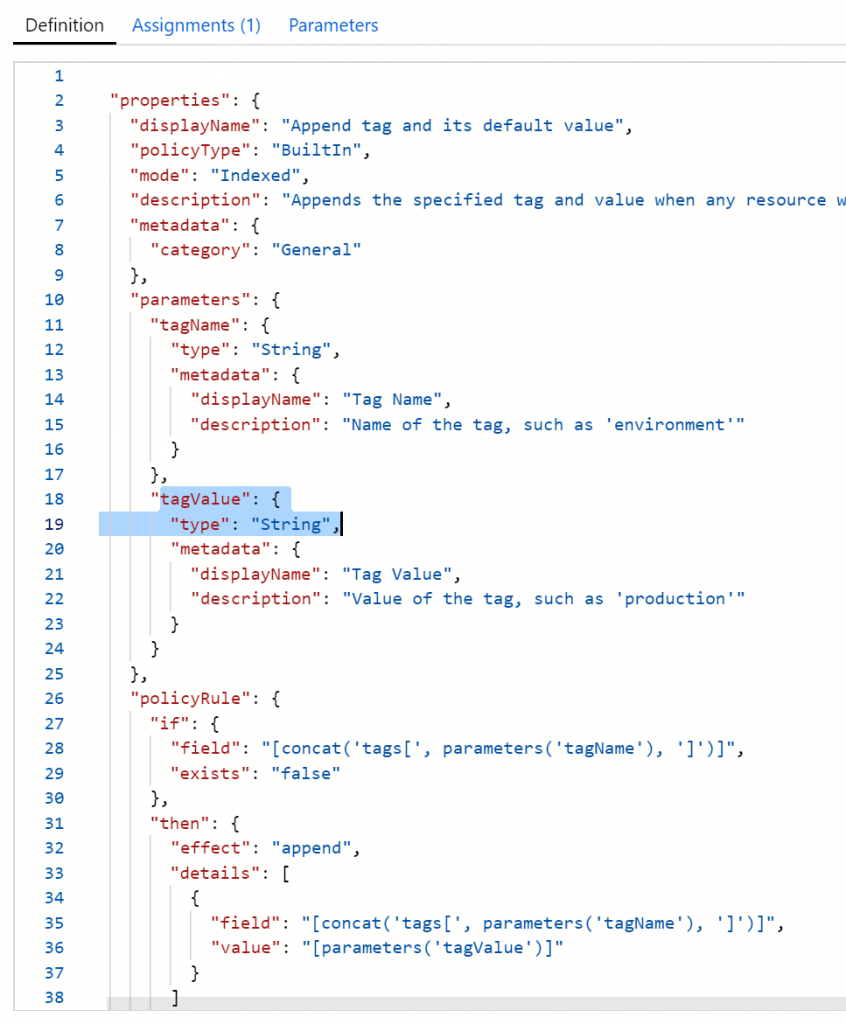

Auto-tagging itself is quite straightforward. There is a default Azure Policy that will append a tag and set its default value. However, this default value is a hard-coded string:

I stumbled upon this blog, which adds a CreatedOnDay tag. That’s nice, because that brings us halfway there! IF (big IF) Azure Policy allows arithmetic functions on dates, we’ll be all set. Let’s try this out.

First things first: have a look at the documentation of the utcNow() function. We have the ability to format the date more nicely, which I’ll do. Next, the ARM language has a function to do additions, but it requires two integers as inputs. I highly doubt this will work, but let’s give it a swing. My policy now looks something like this:

{

"mode": "All",

"policyRule": {

"if": {

"allOf": [

{

"field": "tags['CreatedOnDate']",

"exists": "false"

},

{

"field": "type",

"equals": "Microsoft.Resources/subscriptions/resourceGroups"

}

]

},

"then": {

"effect": "append",

"details": [

{

"field": "tags['CreatedOnDate']",

"value": "[utcNow('d')]"

},

{

"field": "tags['DeleteByDate']",

"value": "[add(utcNow('d'),7)]"

}

]

}

},

"parameters": {}

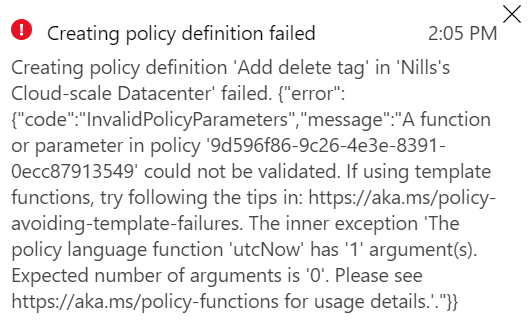

}Let’s give this a spin. And giving it a spin, gave me an immediate error:

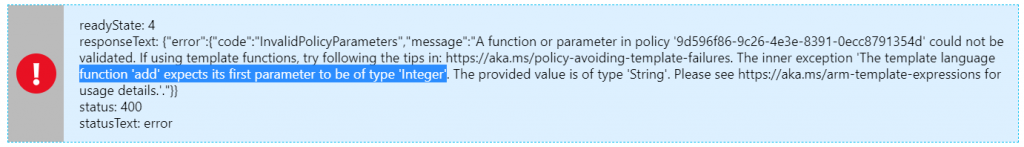

Which means, the pretty print of the utcNow() apparently doesn’t work in a policy definition. I can live with that. Updating my policy to just have utcNow() – I get the error I was expecting, that the add doesn’t support adding to our date, which is a string:

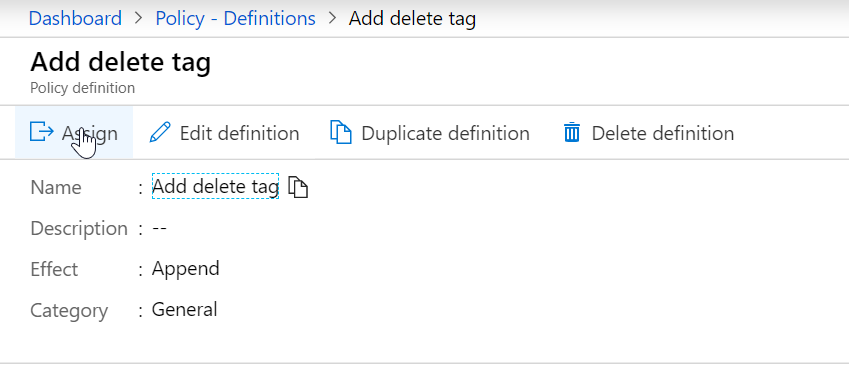

So we’ll remove the DeleteByDate for now, but keep the CreatedOnDate in place. This works. Just for reference, this is the policy I put in place:

{

"mode": "All",

"policyRule": {

"if": {

"allOf": [

{

"field": "tags['CreatedOnDate']",

"exists": "false"

},

{

"field": "type",

"equals": "Microsoft.Resources/subscriptions/resourceGroups"

}

]

},

"then": {

"effect": "append",

"details": [

{

"field": "tags['CreatedOnDate']",

"value": "[utcNow('d')]"

}

]

}

},

"parameters": {}

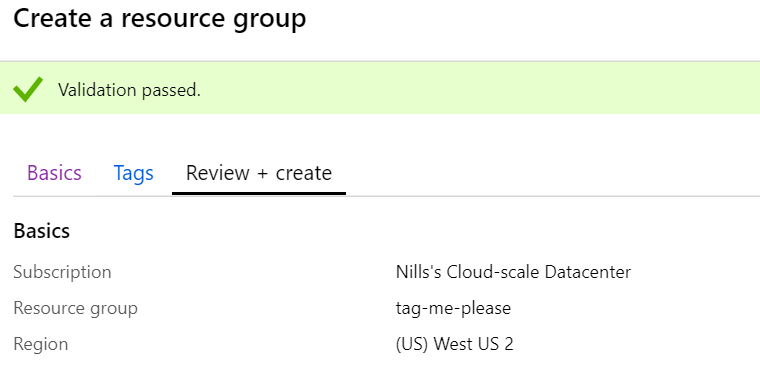

}If we now create a new resource group – we should see it tagged with the current date.

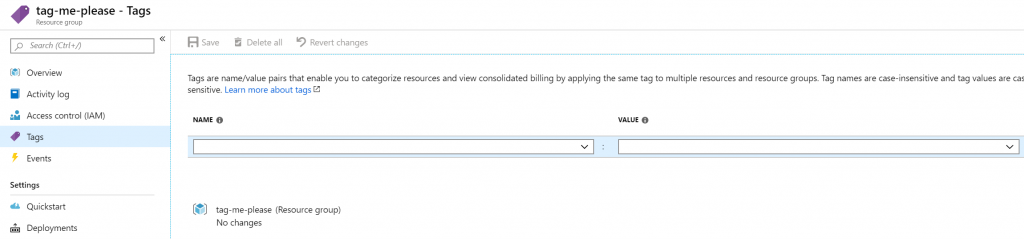

Going into tags for my resource group, I don’t see any tags. I clearly forgot something.

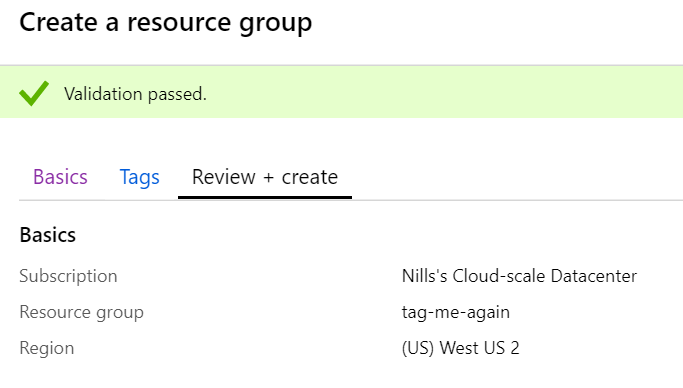

What I did forget was to create a policy assignment as well. We only created the definition, not the assignment. Let’s do this and create a new resource group.

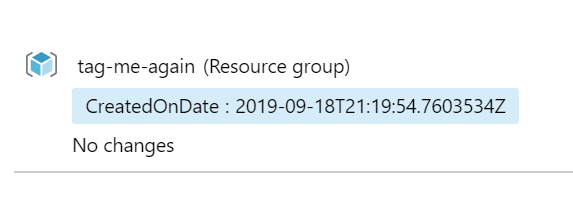

And, drumroll please…

We have a tag now. However, we don’t have our DeleteBy tag yet. We can solve this with an Alert rule that triggers an Automation runbook to set the tag.

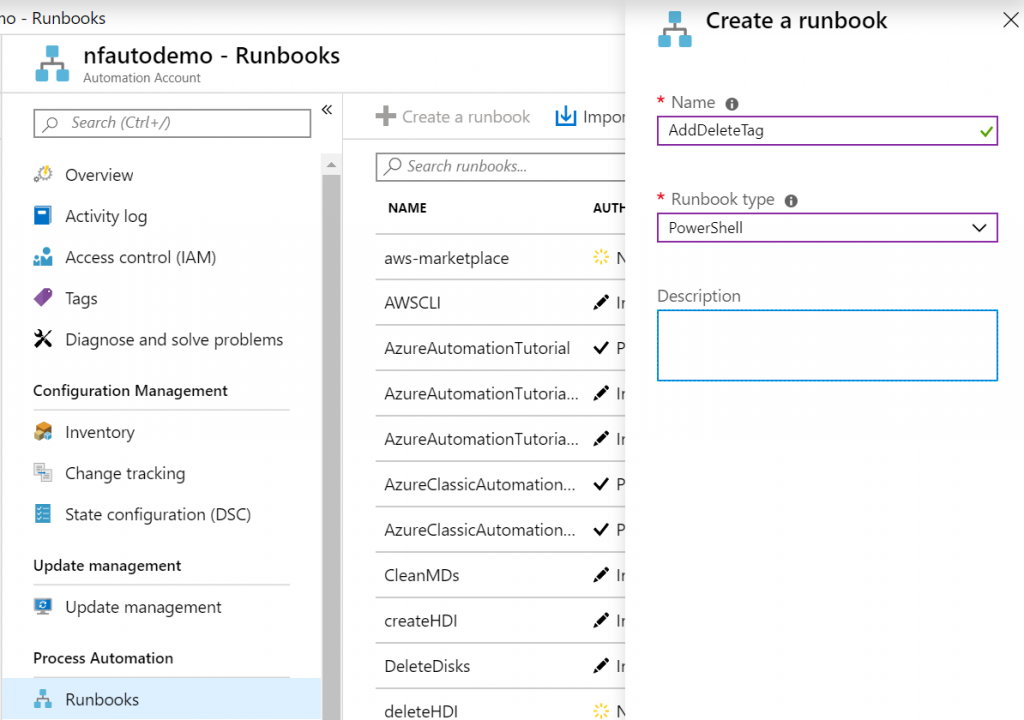

Let’s first create an empty runbook.

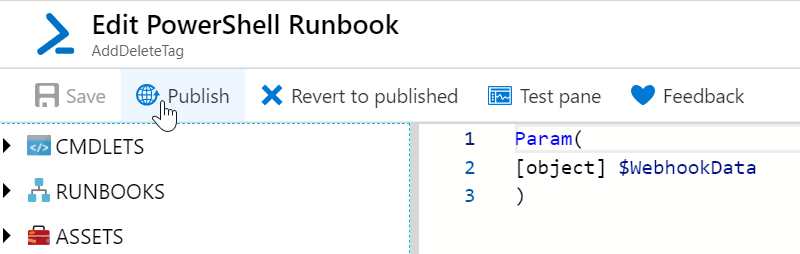

We’ll write the actual script later; for now, make sure the runbook takes in the parameter that the alert rule will send to it. It would look like this. Save and publish this runbook.

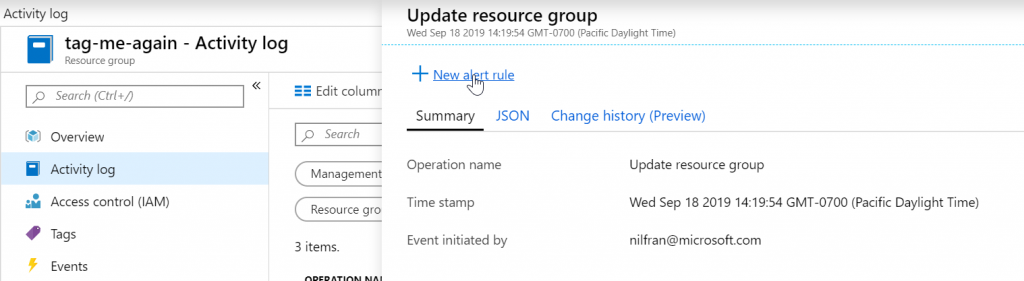

Afterwards, let’s create an alert rule for every time a resource group is created. To create this alert rule, head over to the resource group we just created, hit the activity log, and expand the update activity. You should see a link that allows you to create an alert rule:

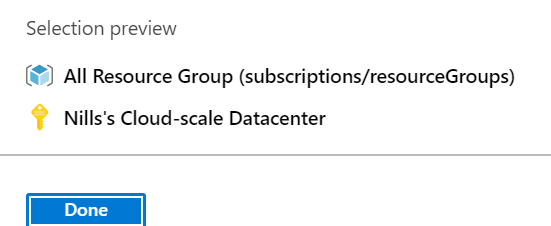

In the alert rule, change the scope to your full subscription:

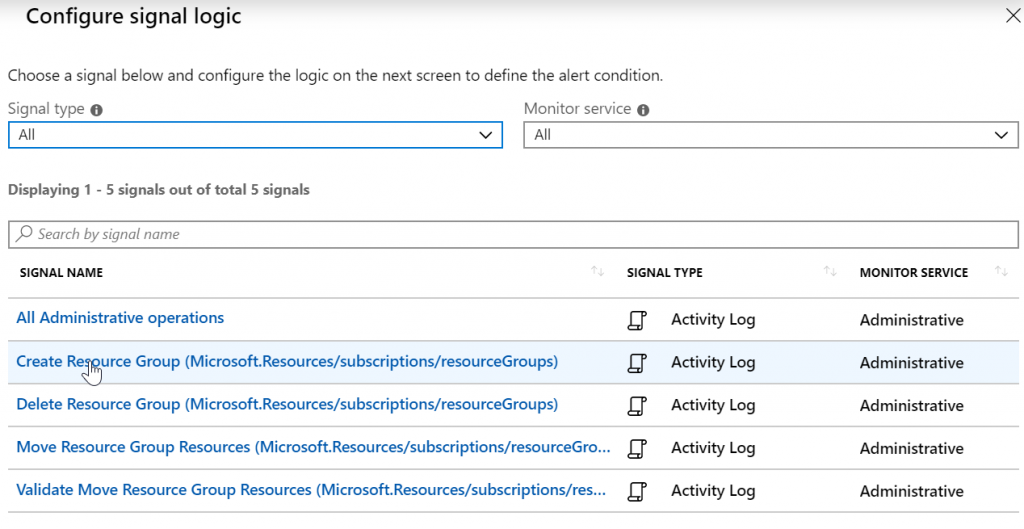

Change the condition to ‘create resource group’:

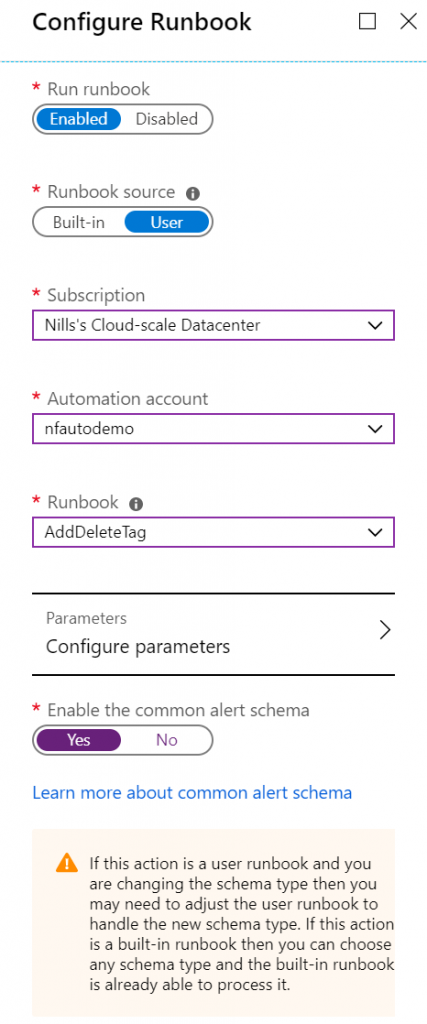

Then, create a new action group, which will trigger an Automation runbook:

Give all of this some names, and create the alert rule:

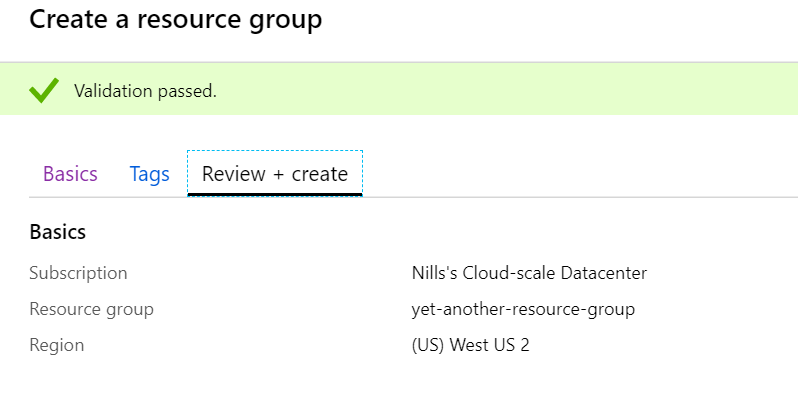

Let’s test if our rule works as expected, by creating yet another resource group. (btw. it can take up to 5 minutes for the rule to become active, so be patient).

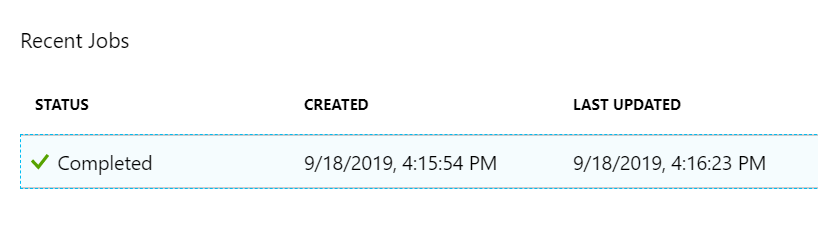

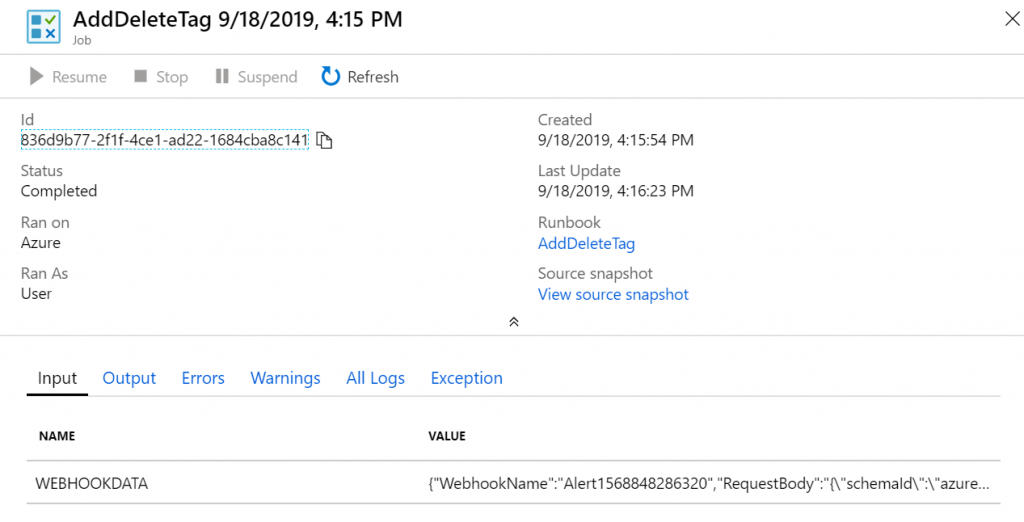

If you head on over to your Automation runbook, you can check whether you got the correct data into your runbook.
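To make the shape of that data a bit more concrete, here is a minimal sketch of pulling the resource group ID out of the webhook body. The payload is a hypothetical, trimmed-down stand-in (a real alert carries many more fields), and the subscription ID and the name demo-rg are made up for illustration:

```powershell
# Hypothetical stand-in for the $WebhookData object that the alert rule
# hands to the runbook. Only the fields used further down are included.
$WebhookData = [pscustomobject]@{
    RequestBody = @'
{
  "data": {
    "essentials": {
      "alertTargetIDs": [
        "/subscriptions/00000000-0000-0000-0000-000000000000/resourcegroups/demo-rg"
      ]
    }
  }
}
'@
}

# The request body arrives as a JSON string, so parse it first.
$WebhookBody = ConvertFrom-Json -InputObject $WebhookData.RequestBody

# The first target ID is the resource ID of the resource group.
$rgid = $WebhookBody.data.essentials.alertTargetIDs[0]

# The last segment of the resource ID is the resource group name.
$rgname = ($rgid -split '/')[-1]

Write-Output "Resource group ID:   $rgid"
Write-Output "Resource group name: $rgname"
```

This runs without any connection to Azure, which makes it a handy way to test the parsing before wiring it into the runbook.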

With that done, we can start developing our script to grab the created date, and add an additional tag with the to-delete-by date.

I won’t bother you with the process of writing the script; this is what I ended up with to add the tag to my resource groups:

Param(

[object] $WebhookData

)

$connectionName = "AzureRunAsConnection"

try

{

# Get the connection "AzureRunAsConnection "

$servicePrincipalConnection=Get-AutomationConnection -Name $connectionName

"Logging in to Azure..."

Add-AzureRmAccount `

-ServicePrincipal `

-TenantId $servicePrincipalConnection.TenantId `

-ApplicationId $servicePrincipalConnection.ApplicationId `

-CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint

}

catch {

if (!$servicePrincipalConnection)

{

$ErrorMessage = "Connection $connectionName not found."

throw $ErrorMessage

} else{

Write-Error -Message $_.Exception

throw $_.Exception

}

}

$WebhookBody = (ConvertFrom-Json -InputObject $WebhookData.RequestBody)

$rgid = $WebhookBody.data.essentials.alertTargetIDs[0]

$rg = Get-AzureRmResourceGroup -Id $rgid

$tags=$rg.Tags

if (-not $tags.Item("deleteByDate"))

{

$createdon = $tags.Item("CreatedOnDate")

$date=[datetime]$createdon

$deleteday=$date.AddDays(7)

$tags += @{deleteByDate=$deleteday}

Set-AzureRmResourceGroup -Id $rgid -Tag $tags

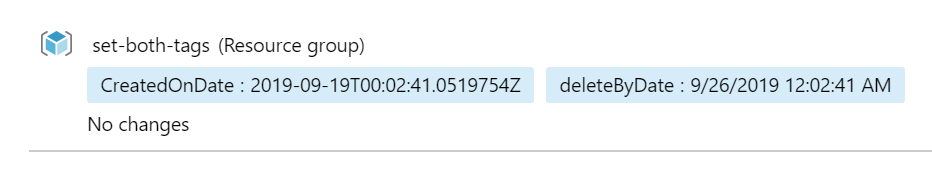

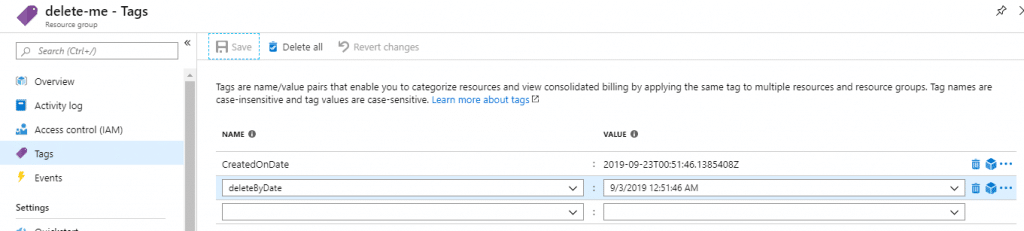

}With that code in the runbook, saved and published, we should be able to create a new resource group and get it tagged with a deleteByDate tag.
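One thing to keep an eye on: the runbook stores a DateTime object in the tag, and how that ends up formatted as a string may depend on the culture settings of the machine running it. Below is a minimal sketch of a more explicit alternative. The helper name Get-DeleteByDate is hypothetical, and the sketch assumes the CreatedOnDate tag holds an ISO 8601 timestamp:

```powershell
# Hypothetical helper (not part of the runbook above): turns a creation
# timestamp into a delete-by date formatted as yyyy-MM-dd.
function Get-DeleteByDate {
    param(
        [Parameter(Mandatory = $true)] [string] $CreatedOn,
        [int] $Days = 7
    )
    $invariant = [System.Globalization.CultureInfo]::InvariantCulture
    # RoundtripKind keeps a trailing "Z" as UTC instead of converting to local time.
    $styles = [System.Globalization.DateTimeStyles]::RoundtripKind
    $created = [datetime]::Parse($CreatedOn, $invariant, $styles)
    # Format explicitly, so the tag value does not depend on the machine's culture.
    return $created.AddDays($Days).ToString('yyyy-MM-dd', $invariant)
}

# Assumed example value for the CreatedOnDate tag.
Get-DeleteByDate -CreatedOn '2020-03-01T12:00:00.0000000Z'
```

A value in the yyyy-MM-dd format can still be read back with a plain [datetime] cast, so a script that parses the tag that way would not need to change.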

Summary of creating a deleteByDate tag

I hope you were all able to follow along with what we did here. We are using Azure Policy to add a CreatedOnDate tag. I don’t have to set this manually, this happens automatically through Azure Policy. Afterwards, we’re using an Alert Rule in combination with an Automation runbook to generate the deleteByDate tag. With that out of the way, we should be able to create another runbook, that will run daily, to check which resource groups should be deleted.

Adding a daily runbook to check which resources should be deleted

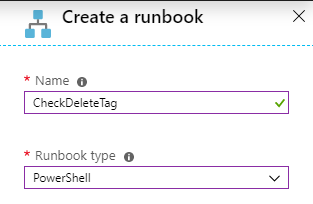

Next up, we’ll create a runbook that will check which resource groups need to be deleted. Thinking about this – we could integrate this in the Logic App we’ll build next for the approval workflow. As we’re going to be parsing dates, I believe this is best done in code rather than the graphical Logic Apps designer. Plus, writing this in PowerShell won’t take long.
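To illustrate why code is a comfortable place for the date logic, here is a minimal sketch of the check itself, runnable without an Azure connection. The helper name Test-DeleteByExpired and the resource groups in the list are made up for illustration. Treating unparseable tag values as “not expired” is a cautious choice on my part, so that a typo in a tag never leads to a deletion request:

```powershell
# Hypothetical helper: returns $true only when the tag value parses as a date
# that lies before $Today. Unparseable values are treated as "not expired".
function Test-DeleteByExpired {
    param(
        [string]   $DeleteBy,
        [datetime] $Today = (Get-Date)
    )
    $invariant = [System.Globalization.CultureInfo]::InvariantCulture
    $styles    = [System.Globalization.DateTimeStyles]::None
    $parsed    = [datetime]::MinValue
    $ok = [datetime]::TryParse($DeleteBy, $invariant, $styles, [ref] $parsed)
    return ($ok -and ($parsed -lt $Today))
}

# Made-up resource groups standing in for the output of Get-AzureRmResourceGroup.
$fakeGroups = @(
    [pscustomobject]@{ ResourceGroupName = 'old-demo'; Tags = @{ deleteByDate = '2020-03-01' } },
    [pscustomobject]@{ ResourceGroupName = 'new-demo'; Tags = @{ deleteByDate = '2020-04-01' } },
    [pscustomobject]@{ ResourceGroupName = 'odd-demo'; Tags = @{ deleteByDate = 'next week' } },
    [pscustomobject]@{ ResourceGroupName = 'untagged'; Tags = $null }
)

# Fixed "today" so the example behaves the same whenever it is run.
$today = [datetime]'2020-03-15'

# Collect the names of all groups whose delete-by date has passed.
$expired = foreach ($rg in $fakeGroups) {
    if ($rg.Tags -and $rg.Tags.ContainsKey('deleteByDate')) {
        if (Test-DeleteByExpired -DeleteBy $rg.Tags['deleteByDate'] -Today $today) {
            $rg.ResourceGroupName
        }
    }
}

Write-Output "Would ask for confirmation on: $($expired -join ', ')"
```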

Let’s go ahead and create a new Runbook:

The following code should do the trick of triggering a logic app that will delete all to-be-deleted resource groups:

    $connectionName = "AzureRunAsConnection"
    try
    {
        # Get the connection "AzureRunAsConnection"
        $servicePrincipalConnection=Get-AutomationConnection -Name $connectionName
        "Logging in to Azure..."
        Add-AzureRmAccount `
            -ServicePrincipal `
            -TenantId $servicePrincipalConnection.TenantId `
            -ApplicationId $servicePrincipalConnection.ApplicationId `
            -CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint
    }
    catch {
        if (!$servicePrincipalConnection)
        {
            $ErrorMessage = "Connection $connectionName not found."
            throw $ErrorMessage
        } else{
            Write-Error -Message $_.Exception
            throw $_.Exception
        }
    }

    $today=get-date
    $rgs = get-azurermresourcegroup
    foreach ($rg in $rgs) {
        $tags = $rg.Tags
        if ($tags){
            if ($tags.Contains("deleteByDate")){
                $date = [datetime]$tags.item("deleteByDate")
                if ($date -lt $today){
                    $rgname = $rg.ResourceGroupName
                    write-output "We'll be deleting ""$rgname"""
                    #call logic app
                }
            }
        }
    }

Adding approval workflow through Logic Apps

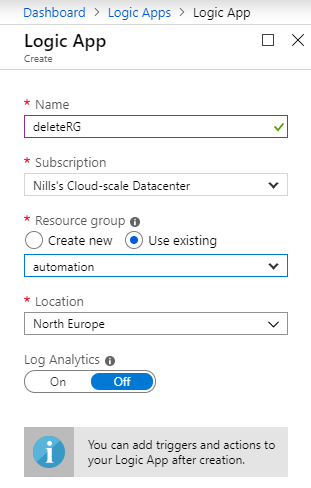

What remains is the task of creating a Logic App with the approval workflow built in. Let’s do that. In the Azure portal, we’ll create a new Logic App.

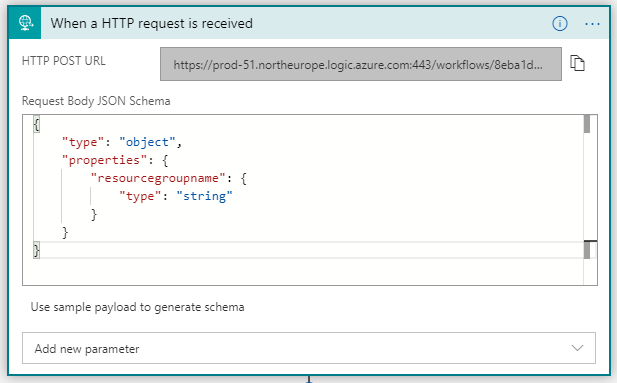

Once in the designer, we’ll start with a blank Logic App, and start with the HTTP trigger. If you do not want to figure out the JSON schema yourself, you can use the sample payload to generate the schema.

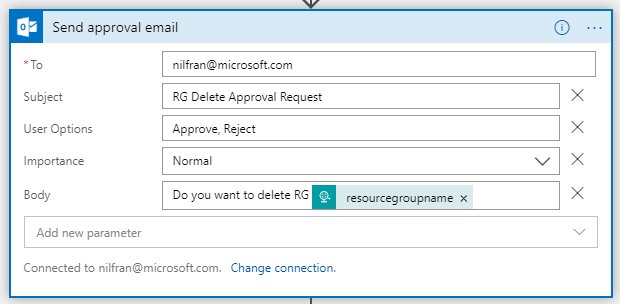

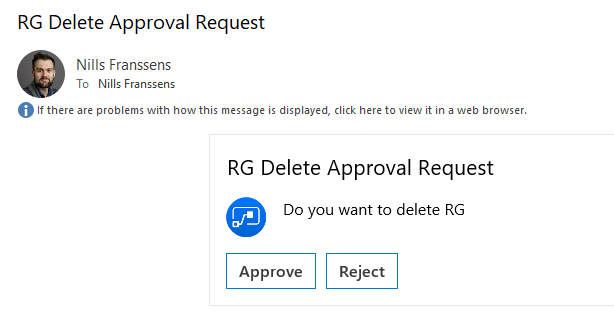

Next, we’ll add an approval e-mail step. Search for that action, select it, and then pair your Office 365 mail account.

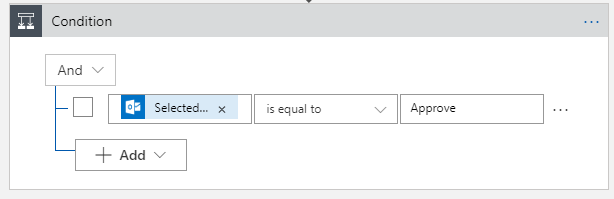

Afterwards, we’ll create a condition. This will create a branch, to either delete or do nothing.

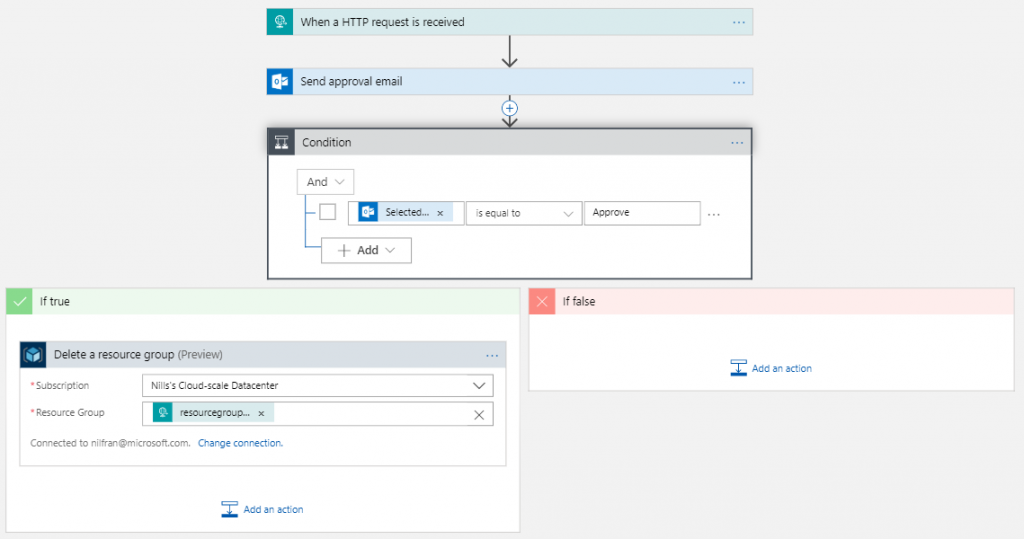

Finally, in our true branch, we’ll create a step to delete a Resource Group. This should make our end-to-end Logic App look like this:

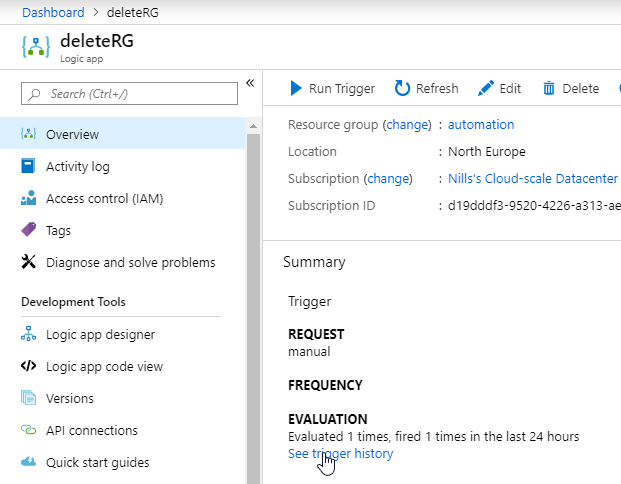

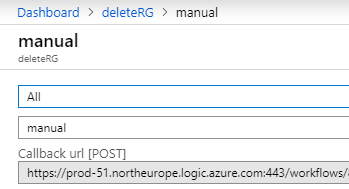

Let’s try out our Logic App in isolation first. I’ll use an app called Postman (or any other API testing tool you want to use) to try out our Logic App. Once you have saved the Logic App, you should have gotten a URL in the first step. This is the URL we’ll need to do our HTTP POST against, but it is not the full URL: it doesn’t contain authentication info and an API version. To get the actual URL, head over to the main blade of your Logic App and hit trigger history. This shows you the full URL:

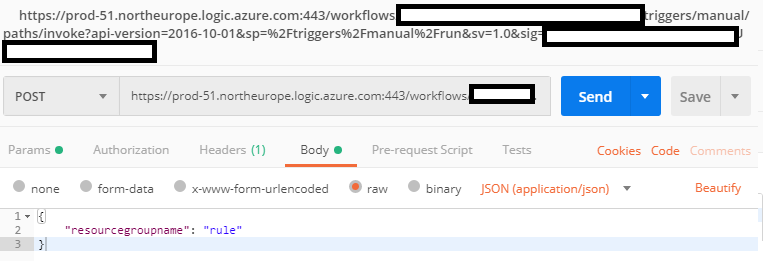

So, we’ll copy and paste this URL into Postman, and then add a body. That body should be raw, of type JSON. Put a resource group name in there that may be safely deleted.

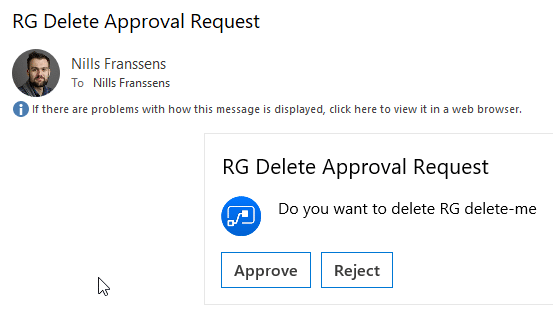
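If you would rather stay in PowerShell than use Postman, the same request body can be built as in the sketch below. The resource group name demo-rg and the URL placeholder are assumptions; the actual call is left commented out so that nothing gets deleted by accident:

```powershell
# Hypothetical resource group name; use one that may be safely deleted.
$rgname = 'demo-rg'

# ConvertTo-Json takes care of quoting and escaping the value.
$body = @{ resourcegroupname = $rgname } | ConvertTo-Json -Compress
Write-Output $body

# Placeholder only: paste the full trigger URL of your own Logic App here.
$url = '<full trigger URL from the trigger history>'

# Uncomment once $url points at a real Logic App:
# Invoke-RestMethod -Uri $url -Method Post -ContentType 'application/json' -Body $body
```

The property name resourcegroupname has to match the schema defined in the HTTP trigger of the Logic App.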

This will send us an approval e-mail. Once we hit approve, we should see our Logic App finish and delete our RG:

This now has successfully deleted our resource group. Let’s now make our automation runbook of the previous step complete by adding in the code to call our Logic App. I’ve added the full code of the Runbook again below:

    $connectionName = "AzureRunAsConnection"
    try
    {
        # Get the connection "AzureRunAsConnection"
        $servicePrincipalConnection=Get-AutomationConnection -Name $connectionName
        "Logging in to Azure..."
        Add-AzureRmAccount `
            -ServicePrincipal `
            -TenantId $servicePrincipalConnection.TenantId `
            -ApplicationId $servicePrincipalConnection.ApplicationId `
            -CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint
    }
    catch {
        if (!$servicePrincipalConnection)
        {
            $ErrorMessage = "Connection $connectionName not found."
            throw $ErrorMessage
        } else{
            Write-Error -Message $_.Exception
            throw $_.Exception
        }
    }

    $today=get-date
    $rgs = get-azurermresourcegroup
    foreach ($rg in $rgs) {
        $tags = $rg.Tags
        if ($tags){
            if ($tags.Contains("deleteByDate")){
                $date = [datetime]$tags.item("deleteByDate")
                if ($date -lt $today){
                    $rgname = $rg.ResourceGroupName
                    write-output "We'll be deleting ""$rgname"""
                    # Call the Logic App, passing the resource group name as JSON
                    $url = "https://prod-51.northeurope.logic.azure.com:443/workflows/8eba1db6ea5140eaa1bf7d7c227aa318/triggers/manual/paths/invoke?api-version=2016-10-01&sp=%2Ftriggers%2Fmanual%2Frun&sv=1.0&sig=qvQk1RyWOPc3w43ZGRE9R9qUdw9_EUhe8xzRZwacO9s"
                    $body = "{""resourcegroupname"":""$rgname""}"
                    Invoke-WebRequest -Uri $url -ContentType "application/json" -Method POST -Body $body -UseBasicParsing
                }
            }
        }
    }

Let’s test this end-to-end now. For this, we’ll create a new resource group, and wait about 2 minutes for it to receive its DeleteByDate tag. We’ll go ahead and manually edit that tag to a date in the past, so we can test our Automation / Logic App integration:

With that done, let’s trigger our Automation runbook, which will call our Logic App. Just hit start on the runbook, and wait for about a minute for it to finish. And sure enough, I got my approval e-mail in my inbox a minute later:

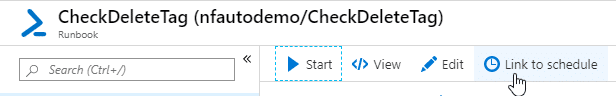

The final thing left to do is to tie this Automation runbook to a schedule, so it will quietly run in the background every day.

I decided to let it run in the evening, at 19:00.

And this should do it.

Summary

As there was a bit involved in creating this automation end-to-end, let’s review our workflow to see if we stuck to the plan:

We first created a policy + Automation runbook to tag our resource groups with a delete date. Then we created a combination of an Automation runbook with a Logic App to check those tags and send approval e-mails asking whether or not we want to delete them.

The goal of all of this was to create an automated process to delete test infrastructure. It was a bit more involved than I had initially thought, but I’m fairly happy with the end result.