Introduction

I recently played around with Kubernetes NetworkPolicies while writing part 7 of my CKAD series, and I hit some configurations that didn’t make sense at all. Because I was just learning about NetworkPolicies, I thought it was just me, but it appears it might have been an Azure issue. That being said, if you want to follow my troubleshooting and watch me doubt myself, go ahead and follow along. If you’d rather not learn the wrong things and just want to learn about NetworkPolicies, head on over to part 7 in the CKAD series.

The NetworkPolicy experiment

If you are planning, like me, to run this example on an Azure Kubernetes Service (AKS) cluster, make sure your cluster is enabled for network policies. They aren’t by default. You’ll want to add the --network-policy azure flag to your az aks create command. It cannot be applied after a cluster has already been created.

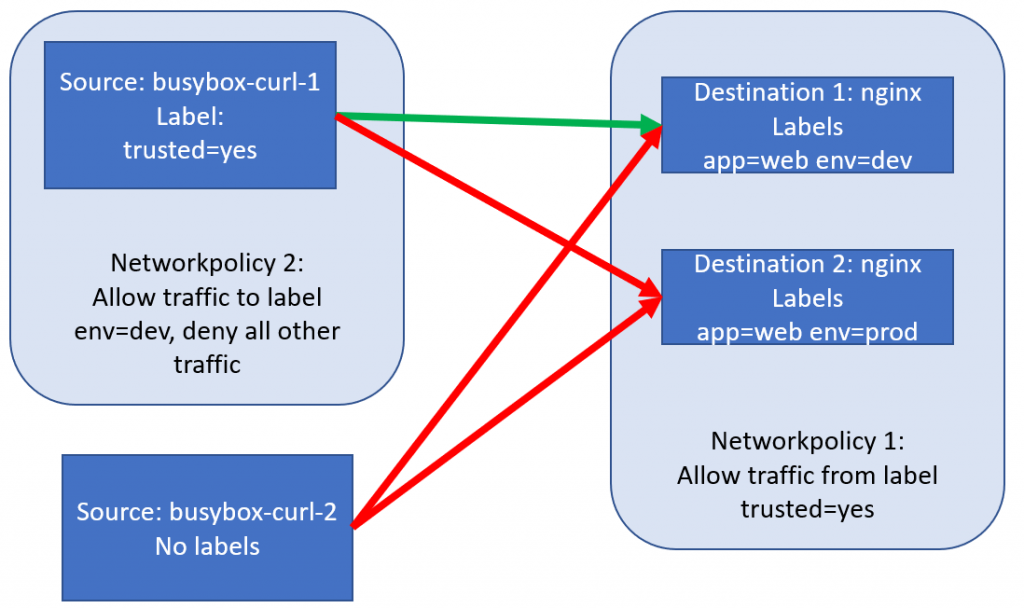

Let’s build a simple example to demonstrate how NetworkPolicies work. We’ll create four pods within the same namespace: two busybox-curl pods, one with a trusted label and one without, and two nginx web servers, both labeled app=web, one labeled env=dev and the other env=prod.
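The labels matter because NetworkPolicies pick the pods they apply to, and the peers they allow, with label selectors. Here is a tiny Python model of matchLabels semantics applied to the four pods above. A pod matches only if it carries every listed key/value pair, and an empty selector matches every pod. The PODS dict and the helper names are my own illustration, not anything from Kubernetes or kubectl:

```python
# Hypothetical in-memory model of the four pods described above (name -> labels).
PODS = {
    "busybox-curl-1": {"trusted": "yes"},
    "busybox-curl-2": {},
    "nginx-dev": {"app": "web", "env": "dev"},
    "nginx-prod": {"app": "web", "env": "prod"},
}


def match_labels(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels semantics: every key/value pair must be present on the pod.

    An empty selector ({}) therefore matches every pod.
    """
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict[str, str]) -> list[str]:
    """Names of the pods a selector matches, in definition order."""
    return [name for name, labels in PODS.items() if match_labels(selector, labels)]


if __name__ == "__main__":
    print(select({"app": "web"}))                # both nginx servers
    print(select({"trusted": "yes"}))            # only the trusted busybox
    print(select({"app": "web", "env": "dev"}))  # all pairs must match
    print(select({}))                            # empty selector: every pod
```

Against a real cluster, kubectl get pods -l app=web answers the same question as select({"app": "web"}).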

Now, I’m going to take you on a learning experience. I assumed the policies in the drawing above would have the effect I drew there. THAT IS WRONG.

Let’s create a new namespace for our networking work, and set it as the default for our kubectl:

```shell
kubectl create ns networking
kubectl config set-context $(kubectl config current-context) --namespace=networking
```

By now I expect you’d be able to create the YAML for these pods, so try to write it yourself before checking mine:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox-curl-1
  labels:
    trusted: "yes"
spec:
  containers:
  - name: busybox
    image: yauritux/busybox-curl
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox-curl-2
spec:
  containers:
  - name: busybox
    image: yauritux/busybox-curl
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-dev
  labels:
    app: "web"
    env: "dev"
spec:
  containers:
  - name: nginx
    image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-prod
  labels:
    app: "web"
    env: "prod"
spec:
  containers:
  - name: nginx
    image: nginx
```

We can create these pods with kubectl create -f pods.yaml. Let’s get the IPs of our nginx servers and check whether we can connect to both of them from both busyboxes:

```shell
kubectl get pods -o wide # note the IP addresses of both nginx pods

kubectl exec -it busybox-curl-1 -- sh
curl *ip1*
curl *ip2*
# both curls should return the nginx default page
exit # move on to the next busybox

kubectl exec -it busybox-curl-2 -- sh
curl *ip1*
curl *ip2*
# both curls should return the nginx default page
```

If your results are the same as mine, all traffic flows from both busyboxes to both nginx servers.

Let’s now define our first NetworkPolicy, which allows ingress traffic to our app=web pods only from pods labeled trusted=yes. It looks like this:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: policy1-allow-trusted-busybox
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          trusted: "yes"
```

We can deploy this policy with kubectl create -f policy1.yaml. Creating this policy has no impact on the running pods (they remain up and running), but it affects network traffic almost instantly. Let’s test it out:

```shell
kubectl get pods -o wide # note the IP addresses of both nginx pods

kubectl exec -it busybox-curl-1 -- sh
curl *ip1*
curl *ip2*
# both curls should return the nginx default page
exit # move on to the next busybox

kubectl exec -it busybox-curl-2 -- sh
curl *ip1*
curl *ip2*
# these two curls should fail, as busybox-curl-2 doesn't have the trusted label
```

Let’s now also deploy our second policy, which limits egress traffic from our trusted busybox to the dev environment only. This policy looks like this:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: policy2-allow-to-dev
spec:
  podSelector:
    matchLabels:
      trusted: "yes"
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          env: dev
```

Let’s create this policy as well with kubectl create -f policy2.yaml, and see what the effect is:

```shell
kubectl get pods -o wide # note the IP addresses of both nginx pods

kubectl exec -it busybox-curl-1 -- sh
curl *nginxdev* # yes, it still works
curl *ip2* # oh wait, this still works?
```

I was expecting traffic to flow to our dev nginx (which is allowed) but not to our prod nginx (because that traffic was not explicitly allowed for the busybox). But that network flow still worked. So I started to doubt my policy, but my policy worked great: I wasn’t able to ping busybox-curl-2. Let me show you:

```shell
kubectl get pods -o wide # note the IP address of busybox-curl-2

kubectl exec -it busybox-curl-1 -- sh
ping *busybox-2 ip* # ping fails
exit # exit out of the container

kubectl delete -f policy2.yaml

kubectl exec -it busybox-curl-1 -- sh
ping *busybox-2 ip* # ping works
```

OK, I’m getting confused at this point. Is my demo broken, or is my understanding broken? Let’s create a third policy, which according to the Kubernetes documentation blocks all egress traffic in a namespace:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
spec:
  podSelector: {}
  policyTypes:
  - Egress
```

kubectl create -f denyall.yaml will create our deny-all policy. Let’s try some network flows:

kubectl get pods -o wide #to get some IPs

kubectl exec -it busybox-curl-1 sh

ping *busybox2* #fails

curl *nginxdev* #works

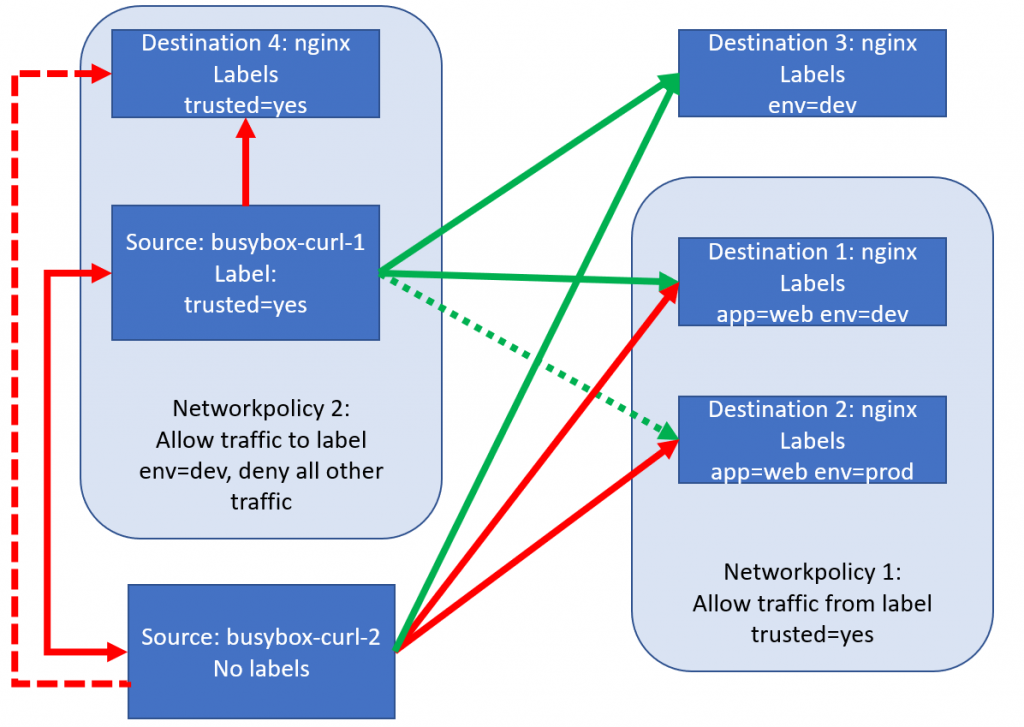

curl *nginxprod* #worksOk, this is starting to make sense now. Let’s finish making sense of this by deploying a couple of new pods: a nginx-pod without the app label, making it a target for our policy2, but not for our policy 1. We’ll also create a nginx pod with the trusted=yes label, so no Ingress policy will apply and it’s not the target of our second policy. Please note, we’ll still be working with the deny all policy at this point, we deleted our policy2.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-dev-noapp
  labels:
    env: "dev"
spec:
  containers:
  - name: nginx
    image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-trusted-yes
  labels:
    trusted: "yes"
spec:
  containers:
  - name: nginx
    image: nginx
```

We’ll create our new pods with kubectl create -f newpods.yaml and then check some network traffic. At this point, I’m expecting traffic NOT to flow to those pods, as they are not the target of a NetworkPolicy, while we still have a deny-all in place.


```shell
kubectl get pods -o wide # again, let's get some IP addresses

kubectl exec -it busybox-curl-1 -- sh
curl *nginx-prod* # still works, we didn't touch this
curl *nginx-dev* # still works, we didn't touch this
curl *nginx-dev-noapp* # doesn't work, as we don't have our egress set yet
curl *nginx-trusted-yes* # doesn't work, blocked by deny-all
```

If you do the same from busybox-curl-2, you’ll see all traffic fail, as expected due to the default deny-all.
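The upstream Kubernetes rules treat a connection as two checks, and both must pass. The first is the source pod’s egress rules, which apply if any policy selects that pod for Egress. The second is the destination pod’s ingress rules. Here is a minimal Python sketch of just the egress half for the policies in place at this point. The tuple layout and helper names are my own illustration, and the model ignores ports, namespaces and ipBlocks.

Under those assumptions, default-deny leaves both busyboxes with no allowed egress at all. A spec-conformant plugin would then block all four curls from busybox-curl-1, including the two to nginx-prod and nginx-dev that kept working on this AKS cluster:

```python
# Minimal sketch of the EGRESS half of the upstream NetworkPolicy rules, for the
# state at this point: policy1 (Ingress only) plus the default-deny Egress policy.
# Hypothetical model: one namespace, podSelector peers only, no ports or ipBlocks.
PODS = {
    "busybox-curl-1": {"trusted": "yes"},
    "busybox-curl-2": {},
    "nginx-dev": {"app": "web", "env": "dev"},
    "nginx-prod": {"app": "web", "env": "prod"},
    "nginx-dev-noapp": {"env": "dev"},
    "nginx-trusted-yes": {"trusted": "yes"},
}

# Each entry: (podSelector, policyTypes, egress "to" podSelectors).
POLICIES = [
    ({"app": "web"}, {"Ingress"}, []),  # policy1: has no egress rules
    ({}, {"Egress"}, []),               # default-deny: selects every pod, allows nothing
]

TARGETS = ["nginx-prod", "nginx-dev", "nginx-dev-noapp", "nginx-trusted-yes"]


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels semantics: every key/value pair must be present."""
    return all(labels.get(key) == value for key, value in selector.items())


def egress_allowed(src: str, dst: str) -> bool:
    """Would the source pod's egress rules let a connection to dst leave?"""
    rule_sets = [
        to for selector, types, to in POLICIES
        if "Egress" in types and matches(selector, PODS[src])
    ]
    if not rule_sets:
        return True  # no Egress policy selects src, so it is not egress-isolated
    # src is egress-isolated: some "to" selector must match the destination.
    return any(matches(peer, PODS[dst]) for to in rule_sets for peer in to)


if __name__ == "__main__":
    for src in ("busybox-curl-1", "busybox-curl-2"):
        for dst in TARGETS:
            verdict = "allowed" if egress_allowed(src, dst) else "blocked"
            print(f"{src} -> {dst}: {verdict}")
```

An ingress rule on the destination (like policy1 trusting busybox-curl-1) can’t re-open a connection that the source’s egress side already blocks. Popping the default-deny entry off POLICIES, leaving only policy1, makes every destination allowed again on the egress side.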

Let’s now apply our second policy again, while keeping the default deny-all egress policy in place: kubectl apply -f policy2.yaml. Then let’s check some traffic. I’m expecting the first three curls to succeed, and the last one to fail.

```shell
kubectl get pods -o wide # again, let's get some IP addresses

kubectl exec -it busybox-curl-1 -- sh
curl *nginx-prod* # still works, we didn't touch this
curl *nginx-dev* # still works, we didn't touch this
curl *nginx-dev-noapp* # works now, target of our policy2
# doesn't work: blocked by deny-all AND by policy2, as nginx-trusted-yes isn't part
# of the app=web policy and policy2 only allows outbound traffic to env=dev
curl *nginx-trusted-yes*
```

If we now delete the default deny-all policy with kubectl delete -f denyall.yaml, we’ll see an interesting pattern from busybox-curl-2:

```shell
kubectl get pods -o wide # again, let's get some IP addresses

kubectl exec -it busybox-curl-2 -- sh
curl *nginx-prod* # fails, blocked by policy1
curl *nginx-dev* # fails, blocked by policy1
curl *nginx-dev-noapp* # works: no policy on nginx-dev-noapp and no policy on busybox-curl-2
# fails, unexpectedly: there is no egress policy on busybox-curl-2 and no ingress
# policy on nginx-trusted-yes, so this should just work
curl *nginx-trusted-yes*
```

And then I started to doubt myself

I was expecting that last curl to succeed. That is the moment I started to suspect that something might be wrong with the Azure NetworkPolicy implementation, or that something might be very wrong with me.
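For what it’s worth, the upstream rules agree with that expectation. Here is a minimal Python sketch of them for the last experiment, with policy1 and policy2 in place and default-deny removed. A connection is allowed only if both the source’s egress side and the destination’s ingress side allow it.

The Kubernetes documentation also says replies on an allowed connection are implicitly allowed. That means policy2’s egress restriction on nginx-trusted-yes doesn’t stop it from answering. The Policy class and helper names are hypothetical, and ports, namespaces and ipBlocks are ignored:

```python
from dataclasses import dataclass, field

# Minimal sketch of the upstream NetworkPolicy rules for the last experiment:
# policy1 and policy2 in place, default-deny removed. Hypothetical model: one
# namespace, podSelector peers only, no ports or ipBlocks.
PODS = {
    "busybox-curl-1": {"trusted": "yes"},
    "busybox-curl-2": {},
    "nginx-dev": {"app": "web", "env": "dev"},
    "nginx-prod": {"app": "web", "env": "prod"},
    "nginx-dev-noapp": {"env": "dev"},
    "nginx-trusted-yes": {"trusted": "yes"},
}


@dataclass
class Policy:
    pod_selector: dict[str, str]  # which pods the policy applies to
    policy_types: set[str]        # "Ingress" and/or "Egress"
    ingress_from: list[dict[str, str]] = field(default_factory=list)
    egress_to: list[dict[str, str]] = field(default_factory=list)


POLICY1 = Policy({"app": "web"}, {"Ingress"}, ingress_from=[{"trusted": "yes"}])
POLICY2 = Policy({"trusted": "yes"}, {"Egress"}, egress_to=[{"env": "dev"}])


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    return all(labels.get(key) == value for key, value in selector.items())


def end_allows(pod: str, peer: str, direction: str, policies: list[Policy]) -> bool:
    """Check one end of a connection: do pod's policies for `direction` admit peer?"""
    selecting = [
        p for p in policies
        if direction in p.policy_types and matches(p.pod_selector, PODS[pod])
    ]
    if not selecting:
        return True  # pod is not isolated in this direction
    peers = [
        sel for p in selecting
        for sel in (p.ingress_from if direction == "Ingress" else p.egress_to)
    ]
    return any(matches(sel, PODS[peer]) for sel in peers)


def allowed(src: str, dst: str, policies: list[Policy]) -> bool:
    # Source egress AND destination ingress must both allow the connection;
    # replies on an allowed connection are implicitly permitted.
    return (end_allows(src, dst, "Egress", policies)
            and end_allows(dst, src, "Ingress", policies))


if __name__ == "__main__":
    targets = ["nginx-prod", "nginx-dev", "nginx-dev-noapp", "nginx-trusted-yes"]
    for src in ("busybox-curl-1", "busybox-curl-2"):
        for dst in targets:
            ok = allowed(src, dst, [POLICY1, POLICY2])
            print(f"{src} -> {dst}: {'allowed' if ok else 'blocked'}")
```

Under these assumptions the sketch marks busybox-curl-2 -> nginx-trusted-yes as allowed. It also marks busybox-curl-1 -> nginx-prod as blocked, which is what I expected back when policy2 was first created. Appending Policy({}, {"Egress"}) to the list models the runs with the deny-all still in place.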

I did one final experiment that told me it was not just me. I created a default deny-all ingress policy, which is supposed to block all ingress except the ingress explicitly allowed by other policies. Here is where things got strange: if I created that ingress policy AFTER policy1, all traffic got blocked. If I created it BEFORE policy1 (I removed policy1 and reapplied it), the traffic allowed by policy1 worked again.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-in
spec:
  podSelector: {}
  policyTypes:
  - Ingress
```

To verify my beliefs, I created a new test cluster using the Calico plugin, and the Calico NetworkPolicy behaved as I was expecting it to, from step 1. The steps are documented in part 7 of the CKAD series.
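Per the Kubernetes documentation, NetworkPolicies are additive: a pod selected by several policies accepts the union of what they allow. So the order in which policies are created shouldn’t matter. Here is a minimal Python sketch that evaluates every pod pair for both creation orders, assuming only default-deny-in and policy1 exist. The tuple layout and helper names are mine. Both orders give the same answer, and busybox-curl-1 can still reach the app=web pods:

```python
from itertools import permutations

# Minimal sketch of why creation order should not matter upstream: the allowed
# peers of every policy selecting a pod are simply unioned. Hypothetical model
# covering only default-deny-in and policy1 (both Ingress-only), one namespace.
PODS = {
    "busybox-curl-1": {"trusted": "yes"},
    "busybox-curl-2": {},
    "nginx-dev": {"app": "web", "env": "dev"},
    "nginx-prod": {"app": "web", "env": "prod"},
}

# Each entry: (name, podSelector, ingress "from" podSelectors).
DEFAULT_DENY_IN = ("default-deny-in", {}, [])
POLICY1 = ("policy1-allow-trusted-busybox", {"app": "web"}, [{"trusted": "yes"}])


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(src: str, dst: str, policies: list[tuple]) -> bool:
    rule_sets = [peers for _, selector, peers in policies if matches(selector, PODS[dst])]
    if not rule_sets:
        return True  # dst is not ingress-isolated
    return any(matches(peer, PODS[src]) for peers in rule_sets for peer in peers)


def reachability(policies: list[tuple]) -> dict[tuple[str, str], bool]:
    """Allowed/blocked verdict for every ordered pair of distinct pods."""
    return {
        (src, dst): ingress_allowed(src, dst, policies)
        for src in PODS for dst in PODS if src != dst
    }


if __name__ == "__main__":
    first, second = (reachability(list(order))
                     for order in permutations([DEFAULT_DENY_IN, POLICY1]))
    print(first == second)                              # True
    print(first[("busybox-curl-1", "nginx-dev")])       # True
    print(first[("busybox-curl-2", "nginx-dev")])       # False
    print(first[("busybox-curl-1", "busybox-curl-2")])  # False
```

By construction the sketch can’t disagree with itself, and that is the point: in the upstream model there is no state that would make order matter. That matches the Calico behavior described above, not the order-dependent result I saw on AKS.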

I did the right thing and searched the GitHub issues for AKS. Apparently, there was already an open issue related to Azure NetworkPolicy (matching what I encountered in the final step I just described), and I opened a new issue for my problem with ingress/egress mismatching.

Conclusion

I decided not to let my troubleshooting work go to waste. I wrote most of this while actually hitting the cluster and learning about NetworkPolicies, and I hope my cycle of doubt, clarity, and doubt again makes sense. If not, don’t worry: these are basically the ramblings of a madman.