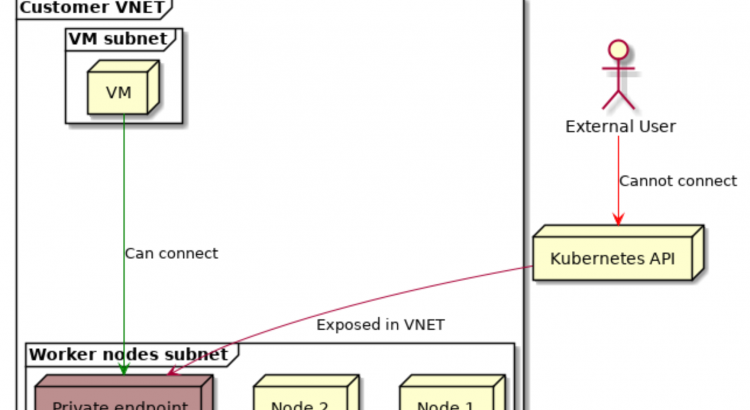

Just a couple of days ago, there was an announcement that Azure now supports (in public preview) AKS private clusters. This means that you can now create a cluster where the API server is hosted on a private IP.

By default, an AKS cluster is created using a public IP for the Kubernetes API server. On that public IP, you can define IP ranges that are authorized to access the API server, but you’re still connecting over the internet.

With this update, your API server will be completely private. This adds a security layer, since only machines connected to your VNET will be able to connect to the API server. Machines and users not connected to your VNET won’t be able to access the Kubernetes API.

This is achieved by creating a private endpoint for the Kubernetes API server in our VNET. If you want to learn more about private endpoints, check out my blog post from a couple of weeks ago.

If you want to try this out for yourself, please make sure to check the limitations in the documentation. Since this feature is new, there are some major limitations, such as no support for Azure DevOps integration and no way to convert existing clusters.

Why don’t we try this out?

Creating a private cluster

First things first, we need to enable this preview in both our AZ CLI tool and on our subscription:

az extension add --name aks-preview

az extension update --name aks-preview

az feature register --name AKSPrivateLinkPreview --namespace Microsoft.ContainerService

#wait a little

az feature list -o table --query "[?contains(name, 'Microsoft.ContainerService/AKSPrivateLinkPreview')].{Name:name,State:properties.state}"
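The "wait a little" step can also be scripted. What follows is a minimal sketch, not something from the original walkthrough: `wait_for_output` is a hypothetical helper that re-runs any command until it prints the expected value, and the usage comment assumes `az feature show` with a `properties.state` query as the command to poll.

```shell
# wait_for_output EXPECTED MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: re-runs COMMAND until it prints exactly EXPECTED,
# or gives up (non-zero exit) after MAX_TRIES attempts.
wait_for_output() {
  expected=$1; max_tries=$2; delay=$3
  shift 3
  try=1
  while [ "$try" -le "$max_tries" ]; do
    current=$("$@" 2>/dev/null)
    if [ "$current" = "$expected" ]; then
      echo "state is '$expected' after $try check(s)"
      return 0
    fi
    echo "check $try/$max_tries: state is '${current:-unknown}', retrying in ${delay}s" >&2
    sleep "$delay"
    try=$((try + 1))
  done
  return 1
}

# Usage (assumes a logged-in az session; not executed here):
# wait_for_output Registered 60 30 \
#   az feature show --namespace Microsoft.ContainerService \
#     --name AKSPrivateLinkPreview --query properties.state -o tsv
```

The attempt count and delay are arbitrary; pick values that match how long registration takes on your subscription.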

#this should be in status 'Registered'. It took a while in my case.

Once the preview feature is registered on your subscription, you need to re-register the Microsoft.ContainerService and Microsoft.Network resource providers:

az provider register --namespace Microsoft.ContainerService

az provider register --namespace Microsoft.Network

And then we can go ahead and create our cluster:

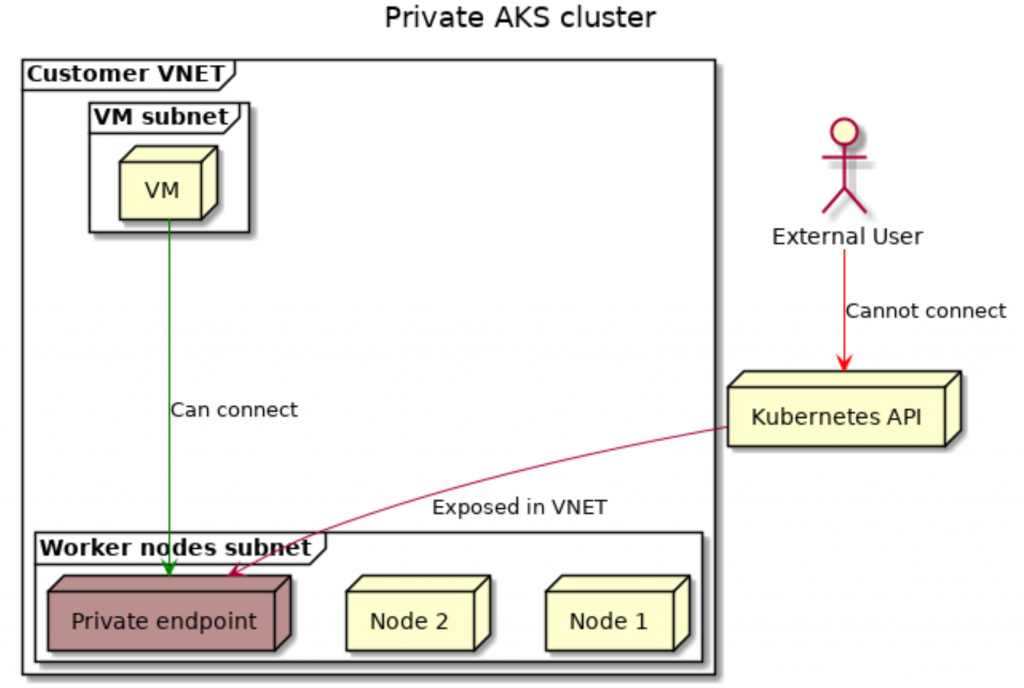

RGNAME=privateaks

LOCATION=westus2

AKSNAME=nfprivateaks

VNETNAME=aksprivate

SUBNET=AKS

az group create -n $RGNAME -l $LOCATION

az network vnet create -g $RGNAME -n $VNETNAME --address-prefix 10.0.0.0/16 \
    --subnet-name $SUBNET --subnet-prefix 10.0.0.0/22

SUBNETID=$(az network vnet subnet show -n $SUBNET --vnet-name $VNETNAME -g $RGNAME --query id -o tsv)

az aks create \
    --resource-group $RGNAME \
    --name $AKSNAME \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --network-plugin azure \
    --vnet-subnet-id $SUBNETID \
    --docker-bridge-address 172.17.0.1/16 \
    --dns-service-ip 10.2.0.10 \
    --service-cidr 10.2.0.0/24

While your cluster is being created, you can already go ahead and create a VM. You’ll need a VM to access the AKS API, since it’s hidden behind a private endpoint. This time, I’ll be lazy and create it via the portal. I decided to play it safe and created another subnet in our VNET to deploy the VM into.
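If you would rather script the VM than click through the portal, something along these lines should work. This is a sketch under assumptions: the subnet name, its address range, the VM name, the admin user and the image alias are all made up for illustration and are not what I used in the portal.

```shell
# Hypothetical names and address range for the extra subnet and the VM.
RGNAME=privateaks
VNETNAME=aksprivate
VMSUBNET=vm
VMSUBNET_PREFIX=10.0.4.0/24   # inside 10.0.0.0/16, outside the AKS subnet 10.0.0.0/22
VMNAME=jumpbox
VMIMAGE=Ubuntu2204            # assumption: swap in whichever Ubuntu alias or URN you prefer

# Creates a second subnet in the existing VNET and a VM inside it.
create_jumpbox() {
  az network vnet subnet create \
    --resource-group "$RGNAME" --vnet-name "$VNETNAME" \
    --name "$VMSUBNET" --address-prefixes "$VMSUBNET_PREFIX" &&
  az vm create \
    --resource-group "$RGNAME" --name "$VMNAME" \
    --image "$VMIMAGE" \
    --vnet-name "$VNETNAME" --subnet "$VMSUBNET" \
    --admin-username azureuser --generate-ssh-keys
}

# Usage (assumes a logged-in az session; not executed here):
# create_jumpbox
```

The only hard requirement is that the VM ends up in the same VNET as the cluster (or in a network that can reach it), so it can resolve and reach the private endpoint.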

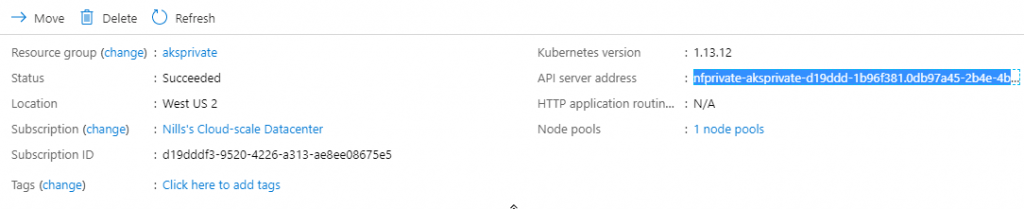

Looking under the covers

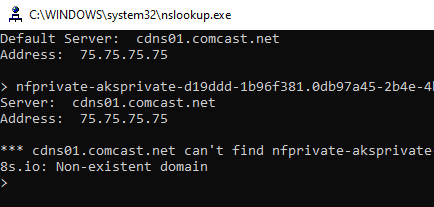

I was curious how all this was implemented, so I decided to have a look under the covers before connecting to my cluster. The first thing I wanted to check is the DNS resolution of the API server address from AKS. I tried an nslookup on my workstation, which didn’t resolve to an address.

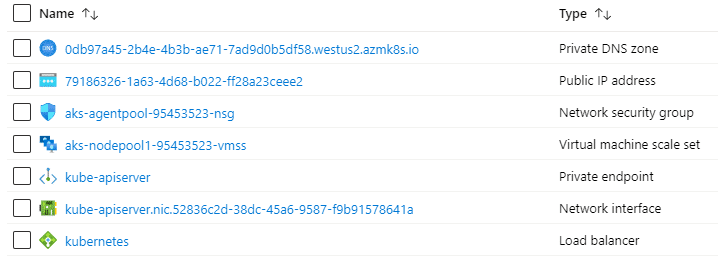

So, let’s have a look at what got deployed in our managed resource group.
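The same information is available from the CLI. The sketch below is an assumption-laden alternative to browsing the portal: `show_private_resources` is a hypothetical helper that reads the managed resource group name from the cluster object and then lists the private DNS zones and private endpoints in it.

```shell
# show_private_resources RESOURCE_GROUP CLUSTER_NAME
# Hypothetical helper: lists private DNS zones and private endpoints
# in the cluster's managed ("node") resource group.
show_private_resources() {
  # The managed resource group name is stored on the cluster object.
  node_rg=$(az aks show -g "$1" -n "$2" --query nodeResourceGroup -o tsv) || return 1
  az network private-dns zone list -g "$node_rg" -o table
  az network private-endpoint list -g "$node_rg" -o table
}

# Usage (assumes a logged-in az session; not executed here):
# show_private_resources privateaks nfprivateaks
```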

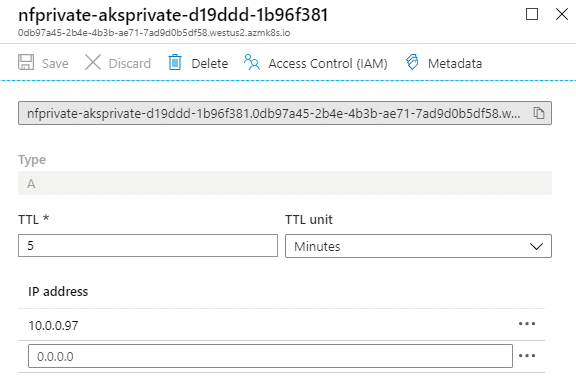

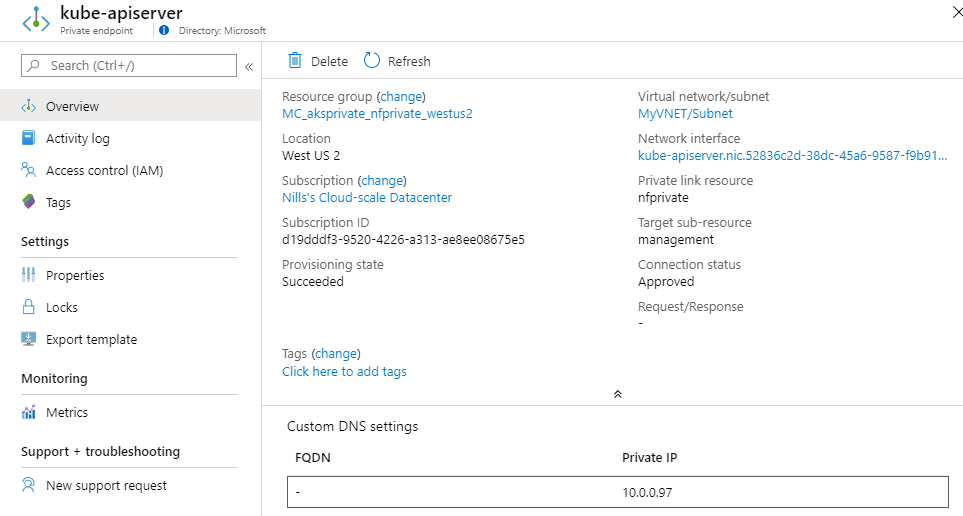

As you can see, a Private DNS zone and a Private endpoint got created. This DNS zone has a single record for now, which is our API server endpoint, pointing to 10.0.0.97.

DNS record in the private zone.
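A quick way to double-check that a resolved address really is private is to test it against the RFC 1918 ranges. The helper below is a hypothetical sketch that only pattern-matches on the dotted string; it does not validate that each octet is a real number between 0 and 255.

```shell
# is_private_ipv4 ADDRESS
# Succeeds if ADDRESS looks like it falls in 10.0.0.0/8,
# 172.16.0.0/12 or 192.168.0.0/16 (string match only, no octet validation).
is_private_ipv4() {
  case "$1" in
    10.*.*.*) return 0 ;;
    192.168.*.*) return 0 ;;
    172.1[6-9].*.* | 172.2[0-9].*.* | 172.3[01].*.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage from a machine inside the VNET (FQDN is a placeholder for your API server address):
# is_private_ipv4 "$(getent hosts "$FQDN" | awk '{print $1}')" && echo "private"
```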

And this DNS record corresponds to our private endpoint:

A final element that piqued my interest is what the ARM object would look like. So I decided to head over to resources.azure.com and have a peek. The ARM object had two references to the fact that it was deployed privately:

...

"privateLinkResources": [
  {
    "id": "/subscriptions/d19dddf3-9520-4226-a313-ae8ee08675e5/resourcegroups/privateaks/providers/Microsoft.ContainerService/managedClusters/nfprivateaks/privateLinkResources/management",
    "name": "management",
    "type": "Microsoft.ContainerService/managedClusters/privateLinkResources",
    "groupId": "management",
    "requiredMembers": [
      "management"
    ]
  }
],
"apiServerAccessProfile": {
  "enablePrivateCluster": true
}

...

This means the cluster itself has a link to the privateLinkResource that got created, as well as an option that says: "enablePrivateCluster": true.
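The flag can also be read back with the CLI instead of resources.azure.com. A small sketch, assuming the property path matches the ARM object shown above; `is_private_cluster` is a hypothetical helper name.

```shell
# is_private_cluster RESOURCE_GROUP CLUSTER_NAME
# Hypothetical helper: succeeds if the cluster reports enablePrivateCluster = true.
is_private_cluster() {
  # JSON output prints a boolean as the literal true or false.
  flag=$(az aks show -g "$1" -n "$2" \
    --query apiServerAccessProfile.enablePrivateCluster -o json) || return 1
  [ "$flag" = "true" ]
}

# Usage (assumes a logged-in az session; not executed here):
# is_private_cluster privateaks nfprivateaks && echo "API server is private"
```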

So, in conclusion, what we’ve seen under the covers:

- A private DNS zone got created for us,

- A private endpoint got created,

- Public DNS resolution no longer works,

- The ARM object has some additional parameters.

With that understanding, let’s connect to the VM we created in that VNET and see if we can connect privately.

Connecting to a private AKS cluster

Since this is a vanilla VM (I created an Ubuntu 18.04 machine), we’ll need to install the AZ CLI and kubectl. If you don’t have trust issues, you can now install the AZ CLI using a one-line command, and you can then use AZ CLI to install kubectl.

RGNAME=privateaks

AKSNAME=nfprivateaks

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

sudo az aks install-cli

az login

az aks get-credentials -g $RGNAME -n $AKSNAME

This will load the cluster credentials into our local kubeconfig file, so we can connect to our cluster. Let’s try a quick kubectl get nodes:

NAME                                STATUS   ROLES   AGE     VERSION
aks-nodepool1-20153561-vmss000000   Ready    agent   2m42s   v1.14.8
aks-nodepool1-20153561-vmss000001   Ready    agent   5m46s   v1.14.8
aks-nodepool1-20153561-vmss000002   Ready    agent   3m48s   v1.14.8

This gives us the nodes in our cluster! And with that, we have deployed an AKS cluster without a public API server!
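If you want to check this from a script rather than by eye, you can count the nodes whose STATUS column reads Ready. A minimal sketch, assuming the default `kubectl get nodes` column layout shown above; `count_ready_nodes` is a hypothetical helper.

```shell
# count_ready_nodes
# Hypothetical helper: reads `kubectl get nodes` output on stdin and prints
# how many nodes have STATUS exactly "Ready" (the header line is skipped).
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (from the VM inside the VNET; not executed here):
# kubectl get nodes | count_ready_nodes
```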

Conclusion

It was fairly easy to set up an AKS cluster with a private API server. For now, it only seems to work using the AZ CLI (the feature is only a couple of days old).

I learned by looking under the covers that this will create an Azure DNS Private Zone for us, together with a Private Link endpoint.

And with that, we have another tool in our tool belt to build secure applications on top of Azure and Kubernetes.