When a pod in Kubernetes needs to start, the images for its containers must first be downloaded to the node. With large container images, this can take up to a few minutes, which might impact your users’ experience.

With a new AKS feature called AKS Artifact Streaming (currently in preview), you can dramatically speed up pod start-up time on nodes where images aren’t present yet. Rather than fully downloading the image before starting the pod, it streams the artifacts so your application can be up and running faster.

In this post, we’ll explore and compare pod start-up times on nodes with slow and fast storage.

For reference, you might want to look at the documentation to learn more about the feature and the limitations of the current preview.

Setting up the Azure Container Registry

First off, we’ll have to set up an Azure Container Registry (ACR) to host our image. We’ll also upload a large image (about 5GiB) to this registry so we have a representative large image to work with.

Let’s start by creating the ACR. This needs to be in the premium tier to support artifact streaming during the preview:

az group create -n aksstreamtest -l westus3

az acr create -g aksstreamtest -n aksstreamtest --sku Premium

Now, let’s create a Dockerfile that creates a large container image:

FROM alpine:latest

# Add content to increase the image size

RUN dd if=/dev/zero of=/dummy bs=1M count=5000

# Command to keep the container running

CMD ["sleep", "infinity"]

And we’ll build this using an ACR task (which I personally think is a great way to build container images from the Azure CLI):

az acr build --image largeimage/test:v1 \

--registry aksstreamtest \

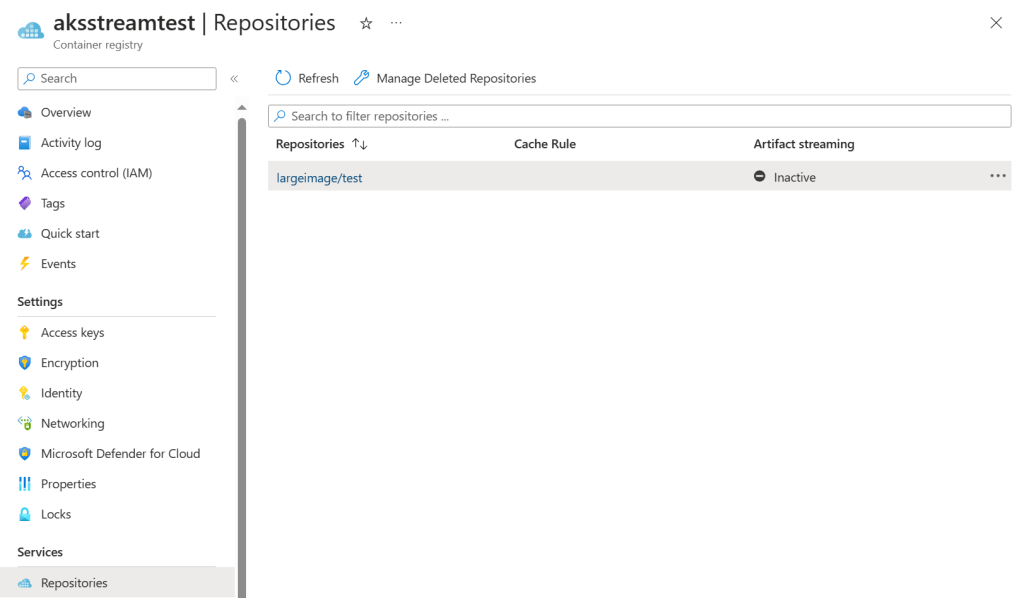

--file Dockerfile . Once that image is built, we can see it in the portal and can also notice that Artifact streaming is Inactive. This inactive refers to automatic conversion to artifact streaming for all versions of this image.

Let’s now enable artifact streaming for this image:

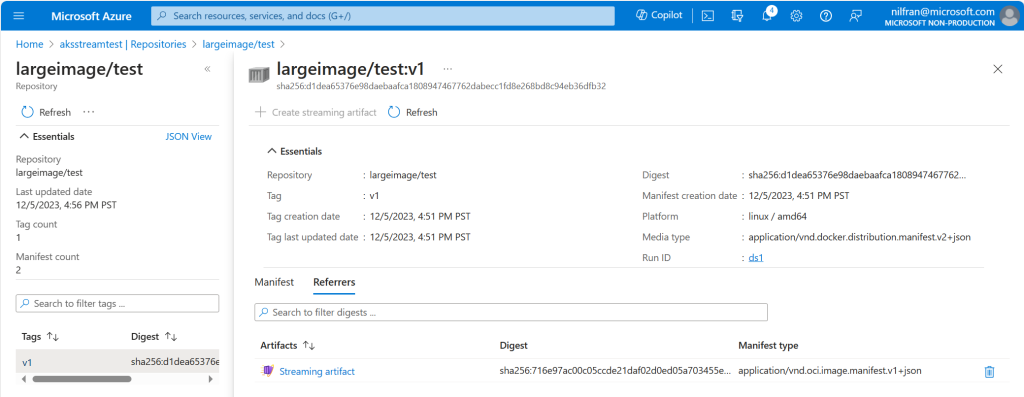

az acr artifact-streaming create -n aksstreamtest --image largeimage/test:v1This will take another few minutes to complete. Once it completes, the status of Artifct Streaming still shows as inactive (given that refers to the automatic conversion), but we can now see that there is a referrer in the image definition:

Preparing our AKS cluster for testing

Let’s now prepare an AKS cluster for testing. First off, you need to enable the preview on your subscription:

az extension add --name aks-preview

az extension update --name aks-preview

az feature register --namespace Microsoft.ContainerService --name ArtifactStreamingPreview

Let’s go ahead and create an AKS cluster with 5 node pools:

- System node pool
  - SKU: Standard_D4ds_v5
- slowart:
  - SKU: Standard_D2_v5
  - No local disk and no premium storage.
  - Artifact streaming: Enabled
- slownoart:
  - SKU: Standard_D2_v5
  - No local disk and no premium storage.
  - Artifact streaming: Disabled
- fastart:
  - SKU: Standard_D4ds_v5
  - Local disk
  - Artifact streaming: Enabled
- fastnoart:
  - SKU: Standard_D4ds_v5
  - Local disk
  - Artifact streaming: Disabled

And we’ll do this using the following Azure CLI:

az aks create \
  --resource-group aksstreamtest \
  --name aksstreamtest \
  --node-count 1 \
  --node-vm-size Standard_D4ds_v5

az aks nodepool add \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name slowart \
  --node-count 1 \
  --node-vm-size Standard_D2_v5 \
  --enable-artifact-streaming

az aks nodepool add \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name slownoart \
  --node-count 1 \
  --node-vm-size Standard_D2_v5

az aks nodepool add \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name fastart \
  --node-count 1 \
  --node-vm-size Standard_D4ds_v5 \
  --enable-artifact-streaming

az aks nodepool add \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name fastnoart \
  --node-count 1 \
  --node-vm-size Standard_D4ds_v5

# Get AKS credentials to configure kubectl
az aks get-credentials --resource-group aksstreamtest --name aksstreamtest

Finally, we’ll also need to link our ACR to our AKS cluster:

az aks update --attach-acr aksstreamtest -n aksstreamtest -g aksstreamtest

Let’s now do some tests and compare/contrast the speed of deployments with and without artifact streaming.

Comparing on nodes with slow storage

The first test is going to show the most impressive results. The node has slow storage (standard HDD), which should show a huge benefit for artifact streaming. Let’s create the following two deployments in Kubernetes:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: slowart
spec:
  replicas: 1
  selector:
    matchLabels:
      app: slowart
  template:
    metadata:
      labels:
        app: slowart
    spec:
      containers:
      - name: my-5gib-container
        image: aksstreamtest.azurecr.io/largeimage/test:v1
      nodeSelector:
        agentpool: slowart
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slownoart
spec:
  replicas: 1
  selector:
    matchLabels:
      app: slownoart
  template:
    metadata:
      labels:
        app: slownoart
    spec:
      containers:
      - name: my-5gib-container
        image: aksstreamtest.azurecr.io/largeimage/test:v1
      nodeSelector:
        agentpool: slownoart

We’ll create both at the same time and watch the pods start:

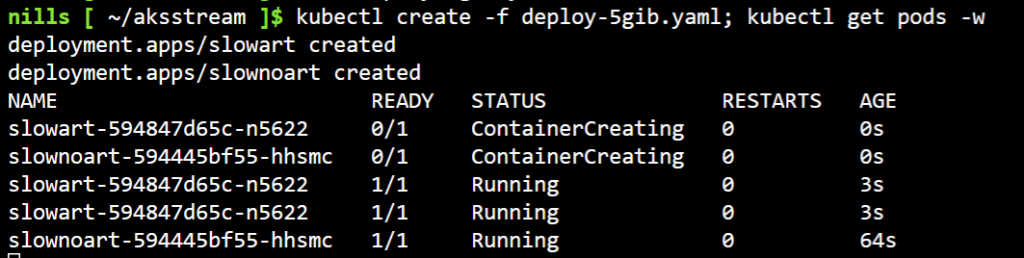

kubectl create -f deploy-5gib.yaml; kubectl get pods -wThis will show us the following results:

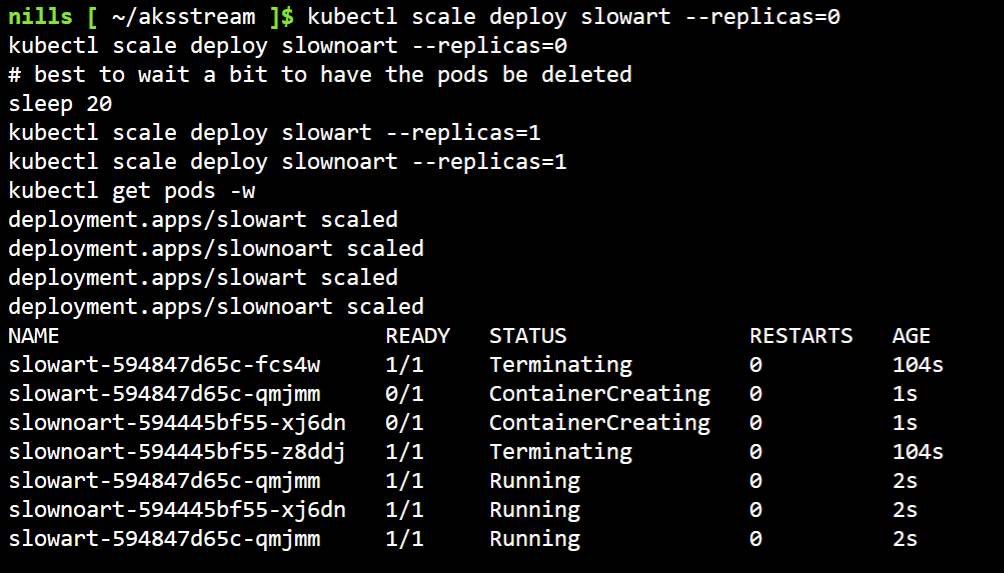

As you can see in the screenshot above, with artifact streaming, the pod was running after 3 seconds; while without artifact streaming it took 64 seconds. Now, please also notice that this is because the image was not present on the node. If we delete both pods and have them be recreated, you’ll see that both pods come at roughly the same time.

kubectl scale deploy slowart --replicas=0

kubectl scale deploy slownoart --replicas=0

# best to wait a bit to have the pods be deleted

sleep 20

kubectl scale deploy slowart --replicas=1

kubectl scale deploy slownoart --replicas=1

kubectl get pods -w

As you can see, once the image was present on the node, both containers started in about 2 seconds. This shows that artifact streaming only helps once the image is not yet present on the node.
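The timings in this post are read off the `kubectl get pods -w` output. If you want a number per pod instead, one option is to compare the pod’s creation timestamp with the start time of its first container. The following is only a sketch: `to_epoch`, `seconds_between` and `pod_start_latency` are hypothetical helper names, and it assumes a `date` that accepts `-d "YYYY-MM-DD hh:mm:ss"` (GNU coreutils does) and timestamps in the second-resolution UTC form Kubernetes reports.

```shell
# Convert an RFC 3339 UTC timestamp such as 2024-05-01T10:00:03Z to epoch seconds.
to_epoch() {
  ts=$(printf '%s' "$1" | sed -e 's/T/ /' -e 's/Z$//')
  date -u -d "$ts" +%s
}

# Print the number of seconds from the first timestamp to the second.
seconds_between() {
  echo $(( $(to_epoch "$2") - $(to_epoch "$1") ))
}

# Print seconds from pod creation until its first container started running.
# Assumes kubectl is configured for the cluster and the container is running.
pod_start_latency() {
  created=$(kubectl get pod "$1" -o jsonpath='{.metadata.creationTimestamp}')
  started=$(kubectl get pod "$1" -o jsonpath='{.status.containerStatuses[0].state.running.startedAt}')
  seconds_between "$created" "$started"
}

# Example (pod name is a placeholder):
#   pod_start_latency slowart-xxxxxxxxxx-xxxxx
```

Because this interval starts at pod creation, it also includes scheduling time, so it may differ slightly from what the watch output suggests.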

Before we move on to the next example, let’s get rid of these deployments:

kubectl delete deploy --all

Comparing on nodes with fast storage

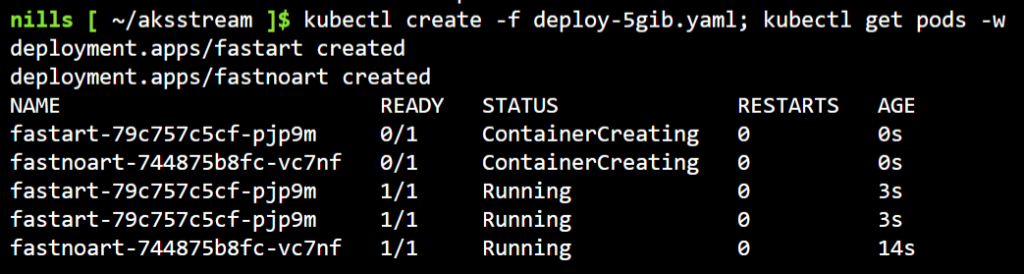

The previous example was a little bit extreme. A two-core machine with a standard HDD attached. However, even in this example where we are using the local temp drive on a Ddsv5 you’ll see the improvement in deployment speed.

Let’s edit our deployments that we’ll deploy:

apiVersion: apps/v1

kind: Deployment

metadata:

name: fastart

spec:

replicas: 1

selector:

matchLabels:

app: fastart

template:

metadata:

labels:

app: fastart

spec:

containers:

- name: my-5gib-container

image: aksstreamtest.azurecr.io/largeimage/test:v1

nodeSelector:

agentpool: fastart

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: fastnoart

spec:

replicas: 1

selector:

matchLabels:

app: fastnoart

template:

metadata:

labels:

app: fastnoart

spec:

containers:

- name: my-5gib-container

image: aksstreamtest.azurecr.io/largeimage/test:v1

nodeSelector:

agentpool: fastnoartOnce we have this, we can delete the previous deployments and create these two new deployments:

I feel this shows a more representative speed-up. You can still see a difference in start-up time of 3 seconds versus 14 seconds, but it’s less exaggerated than the 3 seconds versus 64 seconds from earlier.
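To put the two comparisons side by side, the start-up times can be turned into a ratio. This is just arithmetic on the numbers observed in this post (each from a single run, so treat them as indicative); `speedup` is a hypothetical helper name.

```shell
# Print how many times faster the second time is than the first, to one decimal.
speedup() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.1f\n", slow / fast }'
}

speedup 64 3   # slow storage: 64 s without streaming vs 3 s with it -> 21.3
speedup 14 3   # fast storage: 14 s without streaming vs 3 s with it -> 4.7
```

So on the slow node the pod started roughly 21 times faster with artifact streaming, and on the fast node a bit under 5 times faster.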

Again, let’s remove the deployments:

kubectl delete deploy --all

Final test: is there a difference in multiple containers?

There is a final test I wanted to run, and while I’m typing this I actually don’t know the outcome yet. In the documentation I read the following statement:

With Artifact Streaming, pod start-up becomes concurrent, whereas without it, pods start in serial.

Azure docs

I was curious if this would have an effect on pod start up times, with let’s say 10 replicas on the same node.

To start, we need to remove the image from the nodes. I am sure there are better ways to do this, but I decided to brute-force it and scale the node pools to 0 nodes, and then back to 1 node. That way we have clean nodes.

az aks nodepool scale \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name fastart \
  --node-count 0

az aks nodepool scale \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name fastnoart \
  --node-count 0

az aks nodepool scale \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name fastart \
  --node-count 1

az aks nodepool scale \
  --resource-group aksstreamtest \
  --cluster-name aksstreamtest \
  --name fastnoart \
  --node-count 1

Let’s also update the deployment file to now create 10 pods rather than just 1. Also, this time I’ll do the tests one by one, to get clearer output from the kubectl get pods command. Let’s start with the option leveraging artifact streaming:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: fastart
spec:
  replicas: 10
  selector:
    matchLabels:
      app: fastart
  template:
    metadata:
      labels:
        app: fastart
    spec:
      containers:
      - name: my-5gib-container
        image: aksstreamtest.azurecr.io/largeimage/test:v1
      nodeSelector:
        agentpool: fastart

Let’s create this and see how fast the last container comes online:

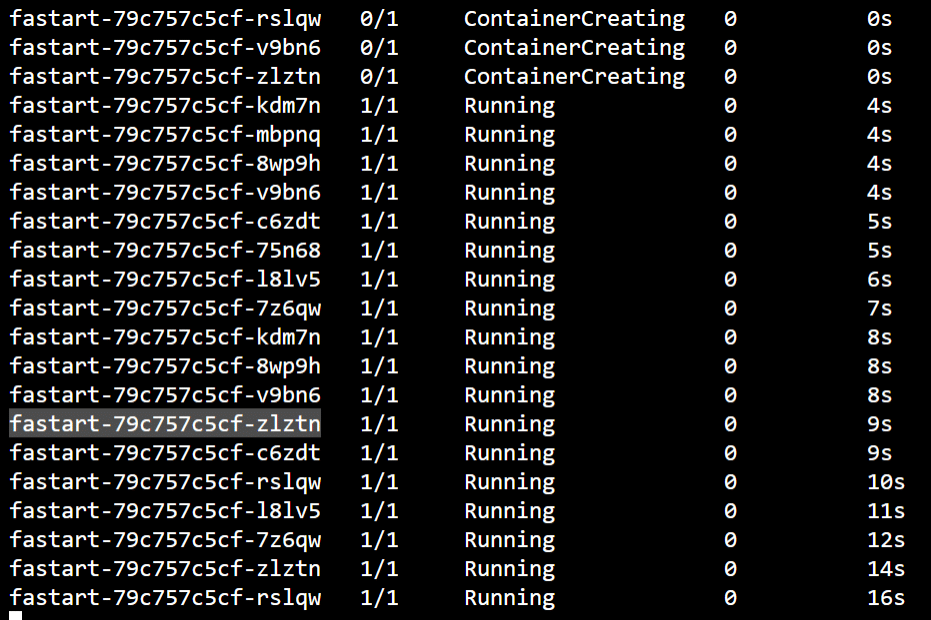

kubectl create -f deploy-5gib.yaml; kubectl get pods -wThe output of the get pods was a bit repetitive, actually showing duplicate running pods:

But, if you’re reading this the same way I am, the 10th pod was running after 9 seconds. Let’s delete this deployment and then create the new one:

kubectl delete deploy --all

For the new deployment, we’ll need to deploy to the fastnoart node pool:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: fastnoart
spec:
  replicas: 10
  selector:
    matchLabels:
      app: fastnoart
  template:
    metadata:
      labels:
        app: fastnoart
    spec:
      containers:
      - name: my-5gib-container
        image: aksstreamtest.azurecr.io/largeimage/test:v1
      nodeSelector:
        agentpool: fastnoart
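The manifests in this post differ only in the deployment name, the node pool and the replica count, so instead of editing the file by hand for each test they could be generated. Below is a minimal sketch, assuming the node pool name doubles as the deployment name and label (as it does in the manifests in this post); `render_deployment` is a hypothetical helper, not something kubectl provides.

```shell
# Print a Deployment manifest for the given node pool.
# $1: node pool name (also used as deployment name and app label)
# $2: replica count (defaults to 1)
render_deployment() {
  name=$1
  replicas=${2:-1}
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: my-5gib-container
        image: aksstreamtest.azurecr.io/largeimage/test:v1
      nodeSelector:
        agentpool: ${name}
EOF
}

# Example: generate the 10-replica manifest and pipe it straight to kubectl.
#   render_deployment fastnoart 10 | kubectl create -f -
```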

Let’s deploy and see how long this takes:

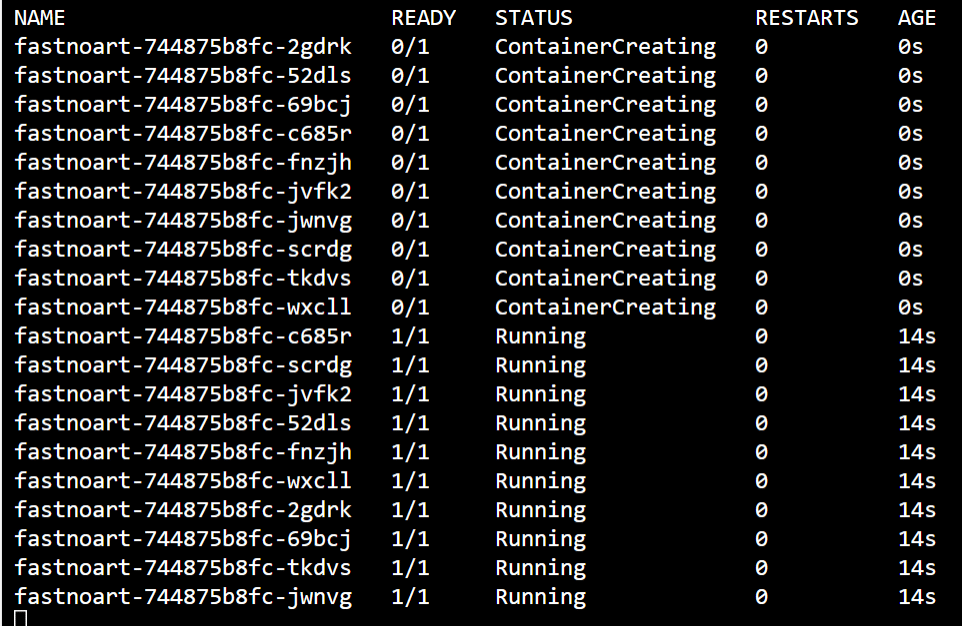

kubectl create -f deploy-5gib.yaml; kubectl get pods -wNo duplicates in the output here:

Surprisingly, once the image was present on the node all 10 containers got created almost immediately, versus a little bit of spread when using artifact streaming.
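Reading the time of the last pod off the watch output is a little error-prone with 10 replicas. An alternative is to time how long `kubectl rollout status` blocks, since it returns once all replicas of the deployment are available. This is a rough sketch with whole-second resolution; `time_cmd` is a hypothetical helper name.

```shell
# Run a command, discard its stdout, and print the elapsed wall-clock seconds.
# The command's exit status is passed through as the function's return value.
time_cmd() {
  start=$(date +%s)
  "$@" >/dev/null
  status=$?
  end=$(date +%s)
  echo $(( end - start ))
  return $status
}

# Example: create the deployment, then time until all replicas are available.
#   kubectl create -f deploy-5gib.yaml
#   time_cmd kubectl rollout status deployment/fastart --timeout=300s
```

Note that the timer only starts after `kubectl create` has returned, so the result is slightly shorter than the pod ages shown by `kubectl get pods`.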

I don’t want to read too much into these results, but I would recommend testing your specific scaling patterns, both with a single pod per host and with multiple pods across multiple hosts.

Summary

In this post we explored ACR and AKS artifact streaming. We saw the impact of the feature on nodes with slower storage and on nodes with faster storage, and saw that it had a positive impact in both scenarios when the image wasn’t present on the node yet. However, we also saw that once the image is present on the node, artifact streaming no longer has an impact on pod start time.