I am doing some work with a customer around mounting Azure Files using NFS in an AKS cluster. In this blog post, I’m summarizing how to achieve this!

In many applications, you need access to some shared files, and there are a couple of ways of setting this up. On Linux, a common approach is NFS. NFS, or Network File System, is a protocol for accessing a remote file system over the network.

Azure Files is a managed file share service in Azure. Initially, the service only supported CIFS/SMB as the protocol to mount and access files. CIFS/SMB serves a similar purpose to NFS, but it’s a different protocol. To make Azure Files friendlier to Linux users, a preview of Azure Files exposing an NFS endpoint was recently announced.

The feature is currently in preview in a few regions and comes with some limitations. An important one is that, for now, it’s only supported on premium Azure Files and only on new storage accounts. For more details on the limitations, please refer to the documentation.

As a final note before we get started: you need a private connectivity method to reach your NFS Azure Files share, meaning either a private endpoint or a service endpoint. In this post, we’ll set up Azure Files with NFS using a private endpoint.

Setting up Azure Files with NFS

The script I used to deploy Azure Files with NFS is also available on GitHub. It doesn’t include the feature registration.

First, we need to register the preview feature:

```shell
az feature register --name AllowNfsFileShares --namespace Microsoft.Storage
az provider register --namespace Microsoft.Storage
```

As per usual, it takes a while for this to register; for me, it took about 15 minutes. To check the state, use the following:

```shell
az feature show --name AllowNfsFileShares --namespace Microsoft.Storage --query properties.state
```

Once the state shows `Registered`, we can create the network we need for this work.
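Rather than rerunning `az feature show` by hand until the state changes, you could poll it from a script. This is a minimal sketch, not part of my original setup: `wait_for_feature` is a hypothetical helper name, and the 30-second interval and 60-attempt limit are arbitrary choices rather than documented values.

```shell
# Poll a preview feature until Azure reports it as "Registered".
# Assumes a logged-in Azure CLI. wait_for_feature is a hypothetical helper;
# the default interval (30 s) and attempt limit (60) are arbitrary.
wait_for_feature() {
  local name="$1" namespace="$2"
  local interval="${3:-30}" max_tries="${4:-60}"
  local state try
  for ((try = 1; try <= max_tries; try++)); do
    # -o tsv returns the bare state string instead of a JSON-quoted one
    state=$(az feature show --name "$name" --namespace "$namespace" \
      --query properties.state -o tsv)
    echo "attempt $try: $name is ${state:-unknown}"
    if [[ "$state" == "Registered" ]]; then
      return 0
    fi
    sleep "$interval"
  done
  echo "gave up waiting for $name to register" >&2
  return 1
}

# Example:
# wait_for_feature AllowNfsFileShares Microsoft.Storage
```

Run with the feature from this post, it returns as soon as `AllowNfsFileShares` is registered, or fails after roughly 30 minutes; after that, continue with the network setup.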

```shell
RGNAME=nfsaks
VNETNAME=nfsaks

az group create -n $RGNAME -l westus2

az network vnet create -g $RGNAME -n $VNETNAME \
    --address-prefixes 10.0.0.0/16 --subnet-name aks \
    --subnet-prefixes 10.0.0.0/24

az network vnet subnet create -g $RGNAME --vnet-name $VNETNAME \
    -n NFS --address-prefixes 10.0.1.0/24
```

Next, let’s create the storage account, turn off HTTPS-only traffic (at the time of writing, NFS shares required secure transfer to be disabled on the account), and create the share.
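Storage account names must be globally unique and 3 to 24 characters long, using only lowercase letters and digits. A quick local check on `STACC` before running the commands below can save a failed deployment. This is a sketch with a hypothetical helper name, and it can’t verify global uniqueness.

```shell
# Local sanity check for an Azure storage account name: 3-24 characters,
# lowercase letters and digits only. The characters are spelled out instead of
# using [a-z] so the check doesn't depend on the shell's locale.
# Global uniqueness can't be checked locally.
is_valid_storage_account_name() {
  local re='^[abcdefghijklmnopqrstuvwxyz0123456789]{3,24}$'
  [[ "$1" =~ $re ]]
}

# Example, using the name from this post:
# STACC=nfnfsaks
# is_valid_storage_account_name "$STACC" || echo "invalid name: $STACC" >&2
```

With the name from this post, `is_valid_storage_account_name nfnfsaks` succeeds, while names like `nfs-aks` or `NfsAks` fail. For the uniqueness part, `az storage account check-name --name $STACC` asks Azure directly.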

```shell
STACC=nfnfsaks

az storage account create \
    --name $STACC \
    --resource-group $RGNAME \
    --location westus2 \
    --sku Premium_LRS \
    --kind FileStorage

az storage account update --https-only false \
    --name $STACC --resource-group $RGNAME

az storage share-rm create \
    --storage-account $STACC \
    --enabled-protocol NFS \
    --root-squash RootSquash \
    --name "akstest" \
    --quota 100
```

Next, we need to set up a private endpoint for the storage account:

```shell
SUBNETID=$(az network vnet subnet show \
    --resource-group $RGNAME \
    --vnet-name $VNETNAME \
    --name NFS \
    --query "id" -o tsv)

STACCID=$(az storage account show \
    --resource-group $RGNAME \
    --name $STACC \
    --query "id" -o tsv)

az network vnet subnet update \
    --ids $SUBNETID \
    --disable-private-endpoint-network-policies

ENDPOINT=$(az network private-endpoint create \
    --resource-group $RGNAME \
    --name "$STACC-PrivateEndpoint" \
    --location westus2 \
    --subnet $SUBNETID \
    --private-connection-resource-id $STACCID \
    --group-id "file" \
    --connection-name "$STACC-Connection" \
    --query "id" -o tsv)
```

Once the private endpoint is created, we also need to create a private DNS zone and an A record for our storage account:

```shell
DNSZONENAME="privatelink.file.core.windows.net"

VNETID=$(az network vnet show \
    --resource-group $RGNAME \
    --name $VNETNAME \
    --query "id" -o tsv)

dnsZone=$(az network private-dns zone create \
    --resource-group $RGNAME \
    --name $DNSZONENAME \
    --query "id" -o tsv)

az network private-dns link vnet create \
    --resource-group $RGNAME \
    --zone-name $DNSZONENAME \
    --name "$VNETNAME-DnsLink" \
    --virtual-network $VNETID \
    --registration-enabled false

ENDPOINTNIC=$(az network private-endpoint show \
    --ids $ENDPOINT \
    --query "networkInterfaces[0].id" -o tsv)

ENDPOINTIP=$(az network nic show \
    --ids $ENDPOINTNIC \
    --query "ipConfigurations[0].privateIpAddress" -o tsv)

az network private-dns record-set a create \
    --resource-group $RGNAME \
    --zone-name $DNSZONENAME \
    --name $STACC

az network private-dns record-set a add-record \
    --resource-group $RGNAME \
    --zone-name $DNSZONENAME \
    --record-set-name $STACC \
    --ipv4-address $ENDPOINTIP
```

Testing NFS with a VM

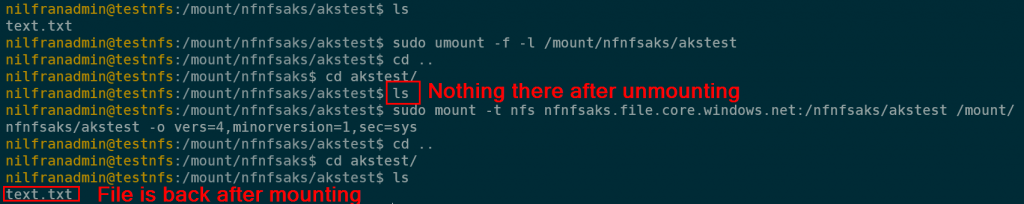

Before testing things out in AKS and risking issues with Kubernetes, I wanted to test out NFS using a regular VM. I created a small Ubuntu VM in the AKS subnet:

```shell
AKSSUBNETID=$(az network vnet subnet show \
    --resource-group $RGNAME \
    --vnet-name $VNETNAME \
    --name aks \
    --query "id" -o tsv)

az vm create -g $RGNAME -n testnfs \
    --subnet $AKSSUBNETID \
    --nsg-rule SSH \
    --ssh-key-values @~/.ssh/id_rsa.pub \
    --image ubuntuLTS
```

Once the VM is created, SSH into it and run the following commands to verify that you can mount the NFS share:

```shell
sudo apt update && sudo apt install nfs-common -y
sudo mkdir -p /mount/nfnfsaks/akstest
sudo mount -t nfs nfnfsaks.file.core.windows.net:/nfnfsaks/akstest /mount/nfnfsaks/akstest -o vers=4,minorversion=1,sec=sys
```

This works. To prove it, I created a file, unmounted the share, remounted it, and the file was still there.
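The file round-trip test can also be scripted on the VM, together with a check that the share’s host name resolves to the private endpoint. The sketch below makes some assumptions of mine: `getent` is available (it is on Ubuntu), the private endpoint’s IP comes from the NFS subnet `10.0.1.0/24`, and all function names are hypothetical. `check_roundtrip` writes a marker file, runs whatever remount command you pass it, and succeeds only if the file is still there afterwards.

```shell
# Convert a dotted IPv4 address to an integer (10# avoids octal parsing).
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) + (10#${o[1]} << 16) + (10#${o[2]} << 8) + 10#${o[3]} ))
}

# True if IPv4 address $1 is inside CIDR block $2 (e.g. 10.0.1.0/24).
ip_in_cidr() {
  local addr net bits mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (addr & mask) == (net & mask) ))
}

# True if host name $1 resolves (IPv4) to an address inside CIDR block $2.
check_private_dns() {
  local addr
  addr=$(getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }')
  [[ -n "$addr" ]] && ip_in_cidr "$addr" "$2"
}

# Write a marker file into $1, run the remount command given as the remaining
# arguments, and succeed only if the marker is still there afterwards.
check_roundtrip() {
  local dir="$1"; shift
  local marker="$dir/roundtrip-check-$$.txt"
  local expected rc=1
  expected="roundtrip $(date +%s)"
  echo "$expected" > "$marker" || return 1
  if "$@" && [[ "$(cat "$marker" 2>/dev/null)" == "$expected" ]]; then
    rc=0
  fi
  rm -f "$marker"
  return "$rc"
}

# Example on the test VM (run as a user that can write to the share):
# check_private_dns nfnfsaks.file.core.windows.net 10.0.1.0/24 && echo "DNS OK"
# MNT=/mount/nfnfsaks/akstest
# check_roundtrip "$MNT" sh -c "sudo umount $MNT && sudo mount -t nfs \
#   nfnfsaks.file.core.windows.net:/nfnfsaks/akstest $MNT -o vers=4,minorversion=1,sec=sys"
```

Passing the remount as a command keeps the helper independent of the exact mount options, so the same check works if you experiment with other NFS settings.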

Now that we’re sure it works, let’s get rid of the VM and test this out in AKS.

```shell
az vm delete -g $RGNAME -n testnfs --yes
```

Mounting NFS in AKS

Let’s first create a new cluster in the AKS subnet we created earlier.
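Before creating the cluster, it’s worth checking the network flags: the `--service-cidr` passed below must not overlap the VNet’s `10.0.0.0/16` address space, and `--dns-service-ip` must fall inside the service CIDR. The sketch below checks both in plain bash; the helper names are hypothetical and only IPv4 is handled.

```shell
# Convert a dotted IPv4 address to an integer (10# avoids octal parsing).
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) + (10#${o[1]} << 16) + (10#${o[2]} << 8) + 10#${o[3]} ))
}

# Print the first and last address of a CIDR block as integers.
cidr_bounds() {
  local base bits size
  base=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  base=$(( base & ~(size - 1) ))
  echo "$base $(( base + size - 1 ))"
}

# True if the two CIDR blocks share at least one address.
cidrs_overlap() {
  local a_lo a_hi b_lo b_hi
  read -r a_lo a_hi <<< "$(cidr_bounds "$1")"
  read -r b_lo b_hi <<< "$(cidr_bounds "$2")"
  (( a_lo <= b_hi && b_lo <= a_hi ))
}

# True if address $1 falls inside CIDR block $2.
ip_in_cidr() {
  local lo hi addr
  read -r lo hi <<< "$(cidr_bounds "$2")"
  addr=$(ip_to_int "$1")
  (( addr >= lo && addr <= hi ))
}

# Check the VNet range, service CIDR and DNS service IP used for az aks create.
check_aks_network() {
  local vnet="$1" service_cidr="$2" dns_ip="$3"
  if cidrs_overlap "$vnet" "$service_cidr"; then
    echo "service CIDR $service_cidr overlaps VNet $vnet" >&2
    return 1
  fi
  if ! ip_in_cidr "$dns_ip" "$service_cidr"; then
    echo "DNS service IP $dns_ip is outside service CIDR $service_cidr" >&2
    return 1
  fi
  return 0
}
```

With the values from this post, `check_aks_network 10.0.0.0/16 10.1.0.0/16 10.1.0.10` succeeds; picking a service CIDR such as `10.0.128.0/17` would fail because it sits inside the VNet.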

```shell
AKSSUBNETID=$(az network vnet subnet show \
    --resource-group $RGNAME \
    --vnet-name $VNETNAME \
    --name aks \
    --query "id" -o tsv)

az aks create -g $RGNAME -n nfstest \
    --vnet-subnet-id $AKSSUBNETID \
    --service-cidr 10.1.0.0/16 \
    --dns-service-ip 10.1.0.10 \
    --network-plugin kubenet

az aks get-credentials -g $RGNAME -n nfstest
```

Once the cluster is created, we can create a pod that mounts our NFS share. I took a lazy approach here: the pod mounts the share directly through an inline `nfs` volume, rather than through a PersistentVolume and PersistentVolumeClaim.

apiVersion: v1

kind: Pod

metadata:

name: myapp

spec:

containers:

- name: myapp

image: busybox

command: ["/bin/sh", "-ec", "sleep 1000"]

volumeMounts:

- name: nfs

mountPath: /var/nfs

volumes:

- name: nfs

nfs:

server: nfnfsaks.file.core.windows.net

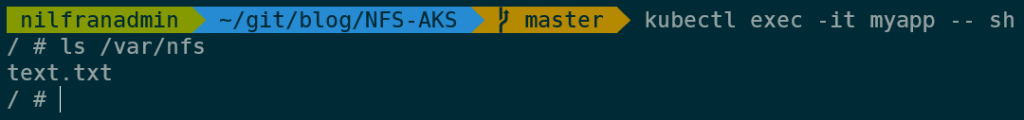

path: "/nfnfsaks/akstest"We can create this pod using kubectl create -f pod-with-nfs.yaml.
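For comparison, the non-lazy route would use a PersistentVolume and PersistentVolumeClaim pointing at the same share. This is a sketch I haven’t run as part of this post: `render_nfs_pv_pvc` is a hypothetical helper, the `-pv`/`-pvc` names and the `ReadWriteMany`/`Retain` settings are my assumptions, and the mount options mirror the ones used on the VM.

```shell
# Render a statically bound PersistentVolume + PersistentVolumeClaim for an
# Azure Files NFS share. Usage: render_nfs_pv_pvc <account> <share> [size]
render_nfs_pv_pvc() {
  local account="$1" share="$2" size="${3:-100Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${share}-pv
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  mountOptions:
    - vers=4
    - minorversion=1
    - sec=sys
  nfs:
    server: ${account}.file.core.windows.net
    path: /${account}/${share}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${share}-pvc
spec:
  accessModes:
    - ReadWriteMany
  # Empty storage class: bind to the PV above instead of provisioning one
  storageClassName: ""
  volumeName: ${share}-pv
  resources:
    requests:
      storage: ${size}
EOF
}

# Example:
# render_nfs_pv_pvc nfnfsaks akstest | kubectl apply -f -
```

The pod would then reference the claim with a `persistentVolumeClaim` volume (`claimName: akstest-pvc`) instead of the inline `nfs` block. The 100Gi default simply matches the share quota; Kubernetes doesn’t enforce it for NFS volumes.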

Once the pod is running, we can exec into it and see what’s on the file share. As expected, the file we created earlier from the VM is there on the mount point.
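The exec step can be wrapped in a small helper that first waits for the pod to become ready. It’s a sketch: the pod name `myapp` and mount path `/var/nfs` come from the manifest above, while the function name and the 120-second timeout are assumptions.

```shell
# Wait for the pod to be Ready, then list the NFS mount from inside it.
# Defaults match the pod manifest in this post; the timeout is arbitrary.
list_share_in_pod() {
  local pod="${1:-myapp}" path="${2:-/var/nfs}"
  kubectl wait --for=condition=Ready "pod/$pod" --timeout=120s >/dev/null || return 1
  kubectl exec "$pod" -- ls -la "$path"
}

# Example:
# list_share_in_pod myapp /var/nfs
```

If the file written from the VM shows up in the listing, the pod is reading the same share through the private endpoint.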

And that’s how you can mount Azure Files over NFS in an Azure Kubernetes cluster.

Summary

In this blog post, we explored mounting Azure Files via NFS in an AKS cluster. Mounting the share itself was quick and seamless; most of the effort went into the setup, since NFS requires a private endpoint, which takes a bit of work to configure. Once the setup was done, everything worked smoothly.

I’m personally looking forward to this being enabled on standard performance Azure Files accounts as well. But even while it’s in preview, it worked like a charm for me.